Red Hat 7.3 RHCS Oracle 12c HA Lab Test Operations Manual

1. Pre-installation Preparation

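The test environment below was built on virtual machines; a real deployment should run on physical hosts. It installs single-instance Oracle together with the Red Hat HA solution, not Oracle RAC.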

| Host | IP address | Role |
| --- | --- | --- |
| sdb1 | 172.26.1.211 | Cluster node 1 |
| sdb2 | 172.26.1.212 | Cluster node 2 |
| sdb-vip | 172.26.1.214 | Cluster VIP |
| sdbh1 | 192.168.1.211 | Node 1 heartbeat |
| sdbh2 | 192.168.1.212 | Node 2 heartbeat |
| db-iscsi | 172.26.1.213 | iSCSI storage, NTP server, YUM server |

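- Operating system installation and configuration

- Select "Server with GUI"; leave all other options unchecked

- Firewall: disabled

- SELinux: disabled

- Hostname configuration

- Internal name resolution (/etc/hosts)

- Network configuration

- NTP service configuration

- Passwordless SSH between the nodes

- YUM repository configuration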

| # cat /etc/redhat-release |

| # systemctl disable firewalld |

| # vi /etc/selinux/config |

| # hostnamectl set-hostname sdb1 |

| # cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
172.26.1.211 sdb1
172.26.1.212 sdb2
172.26.1.214 sdb-vip
192.168.1.211 sdbh1
192.168.1.212 sdbh2 |
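Because every later step depends on these names, it can help to confirm that each one is present on both nodes. The following is a minimal sketch, not part of the original procedure: the helper name `check_hosts` is hypothetical, and it only inspects a hosts-format file rather than testing real name resolution.

```shell
#!/usr/bin/env bash
# check_hosts FILE NAME...: report every NAME that has no entry in FILE.
# Hypothetical helper for illustration; returns non-zero if a name is missing.
check_hosts() {
    local file=$1; shift
    local name missing=0
    for name in "$@"; do
        # Skip comment lines, then look for the name in any column after the IP.
        if ! awk -v n="$name" '
                /^[[:space:]]*#/ { next }
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit !found }' "$file"; then
            echo "missing: $name"
            missing=1
        fi
    done
    return "$missing"
}
```

On a node it could be called as `check_hosts /etc/hosts sdb1 sdb2 sdb-vip sdbh1 sdbh2`; no output and a zero exit status mean all five names are listed.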

| # systemctl stop NetworkManager

# systemctl disable NetworkManager |

| # vi /etc/ntp.conf |

| # ssh-keygen -t rsa

# ssh-copy-id -i .ssh/id_rsa.pub root@172.26.1.212 |

| # cat /etc/yum.repos.d/base.repo
[local-yum]
name=rhel7
baseurl=http://172.26.1.213/rhel/media/
enabled=1
gpgcheck=0

[Cluster]
name=Red Hat Enterprise Linux $releasever - $basearch - Debug
baseurl=http://172.26.1.213/rhel/media/addons/HighAvailability/
enabled=1
gpgcheck=0

[ClusterStorage]
name=Red Hat Enterprise Linux $releasever - $basearch - Debug
baseurl=http://172.26.1.213/rhel/media/addons/ResilientStorage/
enabled=1
gpgcheck=0 |

2. Cluster Configuration

Run the following on all nodes.

| # yum install -y fence-agents-all corosync pacemaker pcs |

| # echo "Manager1" | passwd hacluster --stdin
# systemctl start pcsd.service
# systemctl enable pcsd.service |

Authenticate the cluster nodes as the hacluster user; the resulting credentials are stored in /var/lib/pcsd/tokens.

| # pcs cluster auth sdbh1 sdbh2
Username: hacluster
Password:
sdbh1: Authorized
sdbh2: Authorized |

A. Set the cluster name to sdb, with a token timeout of 10 seconds (10000 ms) and a join timeout of 100 ms

| # pcs cluster setup --name sdb sdbh1 sdbh2 --token 10000 --join 100 |

B. Start the cluster and enable it at boot

| # pcs cluster start --all |

| # pcs cluster enable --all |

- Check that communication between the cluster nodes is healthy

| # corosync-cfgtool -s |

| # corosync-cmapctl | grep members |

| # pcs status corosync |

- Confirm that the two-node quorum settings are correct

| # corosync-quorumtool -s |
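The quorum check can also be scripted. The sketch below assumes that `corosync-quorumtool -s` prints a line of the form `Quorate: Yes` when the partition has quorum; the helper name `is_quorate` is hypothetical.

```shell
#!/usr/bin/env bash
# is_quorate: read `corosync-quorumtool -s` output on stdin and succeed
# only if it contains a "Quorate: Yes" line. Hypothetical helper name.
is_quorate() {
    grep -Eq '^Quorate:[[:space:]]+Yes[[:space:]]*$'
}
```

A typical call would be `corosync-quorumtool -s | is_quorate && echo "cluster is quorate"`.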

| # pcs stonith create sdbf1 fence_ipmilan pcmk_host_list="sdbh1" ipaddr="172.26.1.112" login="admin" passwd="Manager1" lanplus=1 power_wait=4 |

| # pcs stonith create sdbf2 fence_ipmilan pcmk_host_list="sdbh2" ipaddr="172.26.1.116" login="admin" passwd="Manager1" lanplus=1 power_wait=4 |

- View the cluster status

| # pcs status |

| pcs -f stonith_cfg stonith create my_vcentre-fence fence_vmware_soap \
    ipaddr=172.26.1.115 ipport=443 ssl_insecure=1 inet4_only=1 \
    login="root" passwd="P@ssw0rd" \
    action=reboot \
    pcmk_host_map="sdbh1:sdb1;sdbh2:sdb2" \
    pcmk_host_check=static-list \
    pcmk_host_list="sdb1,sdb2" \
    power_wait=3 op monitor interval=60s |

| pcs stonith create sdbf2 fence_ipmilan pcmk_host_list="sdb2" ipaddr="172.26.1.212" login="admin" passwd="Manager1" lanplus=1 power_wait=4
pcs resource create hfbr2r-vip ocf:heartbeat:IPaddr2 ip=10.73.17.84 cidr_netmask=24 op monitor interval=30s |

| # yum -y install targetcli.noarch |

| # mkdir /iscsi_disks |

| # targetcli |

| Warning: Could not load preferences file /root/.targetcli/prefs.bin.
targetcli shell version 2.1.fb41
Copyright 2011-2013 by Datera, Inc and others.
For help on commands, type 'help'.

/> cd /backstores/fileio
/backstores/fileio> create disk01 /iscsi_disks/sdb.img 250G
Created fileio disk01 with size 268435456000
/backstores/fileio> cd /iscsi
/iscsi> create iqn.2020-06.db.com:storage-target
Created target iqn.2020-06.db.com:storage-target.
Created TPG 1.
Global pref auto_add_default_portal=true
Created default portal listening on all IPs (0.0.0.0), port 3260.
/iscsi> cd iqn.2020-06.db.com:storage-target/tpg1/portals/
/iscsi/iqn.20.../tpg1/portals> create 172.26.1.213
Using default IP port 3260
Could not create NetworkPortal in configFS
/iscsi/iqn.20.../tpg1/portals> delete 0.0.0.0 3260
Deleted network portal 0.0.0.0:3260
/iscsi/iqn.20.../tpg1/portals> create 172.26.1.213
Using default IP port 3260
Created network portal 172.26.1.213:3260.
/iscsi/iqn.20.../tpg1/portals> cd ../luns
/iscsi/iqn.20...et0/tpg1/luns> create /backstores/fileio/disk01
Created LUN 0.
/iscsi/iqn.20...get/tpg1/luns> create /backstores/fileio/disk02
Created LUN 1.
/iscsi/iqn.20...et0/tpg1/luns> cd ../acls
/iscsi/iqn.20...et0/tpg1/acls> create iqn.2020-06.db.com:server
Created Node ACL for iqn.2020-06.db.com:server
Created mapped LUN 0.
/iscsi/iqn.20...et0/tpg1/acls> cd iqn.2020-06.db.com:server
/iscsi/iqn.20...ww.server.com> set auth userid=username
Parameter userid is now 'username'.
/iscsi/iqn.20...ww.server.com> set auth password=password
Parameter password is now 'password'.
/iscsi/iqn.20...ww.server.com> exit
Global pref auto_save_on_exit=true
Last 10 configs saved in /etc/target/backup.
Configuration saved to /etc/target/saveconfig.json |
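The size that targetcli reports for disk01 follows from the 250G request if G is read as GiB, which is what the output above suggests (250 × 1024³ bytes). A quick arithmetic check, using a hypothetical helper name:

```shell
#!/usr/bin/env bash
# gib_to_bytes N: print N GiB expressed in bytes (N * 1024^3).
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 250   # prints 268435456000, the size shown in the session above
```

The same helper can be used to estimate how much free space the backing file system needs before creating a fileio backstore.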

| # systemctl enable target.service |

| # systemctl start target.service |

| # vi /etc/iscsi/initiatorname.iscsi
InitiatorName=iqn.2020-06.db.com:server |

| # vi /etc/iscsi/iscsid.conf
#node.session.auth.authmethod = CHAP
#node.session.auth.username = username
#node.session.auth.password = password
(uncomment these three lines) |
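If the edit has to be repeated on several initiators, the three CHAP lines can be uncommented non-interactively. This is a minimal sketch under stated assumptions: GNU sed is available, the file uses the stock `#node.session.auth.*` lines shown above, and the helper name `enable_chap` is hypothetical.

```shell
#!/usr/bin/env bash
# enable_chap FILE USER PASS: uncomment and set the three CHAP lines in an
# iscsid.conf-style file. Hypothetical helper; assumes GNU sed (-i) and that
# USER and PASS contain no "|", "&" or backslash characters.
enable_chap() {
    local file=$1 user=$2 pass=$3
    sed -i \
        -e 's|^#[[:space:]]*\(node\.session\.auth\.authmethod\)[[:space:]]*=.*|\1 = CHAP|' \
        -e "s|^#[[:space:]]*\(node\.session\.auth\.username\)[[:space:]]*=.*|\1 = ${user}|" \
        -e "s|^#[[:space:]]*\(node\.session\.auth\.password\)[[:space:]]*=.*|\1 = ${pass}|" \
        "$file"
}
```

For example, `enable_chap /etc/iscsi/iscsid.conf username password` would apply the credentials configured on the target. The patterns deliberately leave the `username_in` and `password_in` lines untouched, since mutual CHAP is not used in this setup.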

| # systemctl start iscsid |

| # systemctl enable iscsid |

| # iscsiadm -m discovery -t sendtargets -p 172.26.1.213 |

| # iscsiadm -m node -o show |

| # iscsiadm -m node --login |

| # iscsiadm -m session -o show |

| # fdisk -l |

| # iscsiadm -m node -T TARGET -p IP:port -u    # log out of the iSCSI target |

| # iscsiadm -m node -T TARGET -p IP:port -l    # log in to the iSCSI target |

| # iscsiadm -m node -o delete -T TARGET -p IP:port    # delete the iSCSI discovery record |

A. Confirm that the host is connected to the storage

| # lsscsi
[2:0:0:0] disk VMware Virtual disk 2.0 /dev/sda
[3:0:0:0] cd/dvd NECVMWar VMware SATA CD00 1.00 /dev/sr0
[33:0:0:0] disk LIO-ORG disk01 4.0 /dev/sdb
[33:0:0:1] disk LIO-ORG disk02 4.0 /dev/sdc |

- Install and configure the multipath software

| # yum install device-mapper-multipath -y |

| # cp /usr/share/doc/device-mapper-multipath-0.4.9/multipath.conf /etc/ |

| # /usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdb

36001405202779ad9243419580402d157 |

| # /usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdc

360014056d485455a1d54c6fac1c41d9b |

| # cat /etc/multipath.conf
defaults {
    polling_interval 10
    path_selector "round-robin 0"
    path_grouping_policy multibus
    path_checker readsector0
    rr_min_io 100
    max_fds 8192
    rr_weight priorities
    failback immediate
    no_path_retry fail
    user_friendly_names yes
}
multipaths {
    multipath {
        wwid 36001405202779ad9243419580402d157
        alias oradata
    }
    multipath {
        wwid 360014056d485455a1d54c6fac1c41d9b
        alias sbd
    }
} |
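Each `multipath { ... }` entry pairs a WWID reported by `scsi_id` with an alias. As a rough sketch, the entries could be generated instead of typed by hand; the helper name `multipath_stanza` is hypothetical and it only formats text.

```shell
#!/usr/bin/env bash
# multipath_stanza WWID ALIAS: print one "multipath { ... }" entry for the
# multipaths section of multipath.conf. Hypothetical helper; formats text only.
multipath_stanza() {
    printf '    multipath {\n        wwid %s\n        alias %s\n    }\n' "$1" "$2"
}
```

It could be combined with the command used earlier, for example `multipath_stanza "$(/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdb)" oradata`, and the output pasted inside the `multipaths { }` block.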

| # systemctl start multipathd.service

# systemctl enable multipathd.service |

| # parted -a optimal /dev/mapper/oradata -- mklabel gpt mkpart primary ext4 0% 100% |

| # ls -l /dev/mapper/oradata |

| # kpartx -a /dev/mapper/oradata |

| # gdisk /dev/mapper/oradata
GPT fdisk (gdisk) version 0.8.6

Partition table scan:
  MBR: protective
  BSD: not present
  APM: not present
  GPT: present

Found valid GPT with protective MBR; using GPT.

Command (? for help): t
Using 1
Current type is 'Microsoft basic data'
Hex code or GUID (L to show codes, Enter = 8300): L
0700 Microsoft basic data  0c01 Microsoft reserved    2700 Windows RE
4200 Windows LDM data      4201 Windows LDM metadata  7501 IBM GPFS
7f00 ChromeOS kernel       7f01 ChromeOS root         7f02 ChromeOS reserved
8200 Linux swap            8300 Linux filesystem      8301 Linux reserved
8e00 Linux LVM             a500 FreeBSD disklabel     a501 FreeBSD boot
a502 FreeBSD swap          a503 FreeBSD UFS           a504 FreeBSD ZFS
a505 FreeBSD Vinum/RAID    a580 Midnight BSD data     a581 Midnight BSD boot
a582 Midnight BSD swap     a583 Midnight BSD UFS      a584 Midnight BSD ZFS
a585 Midnight BSD Vinum    a800 Apple UFS             a901 NetBSD swap
a902 NetBSD FFS            a903 NetBSD LFS            a904 NetBSD concatenated
a905 NetBSD encrypted      a906 NetBSD RAID           ab00 Apple boot
af00 Apple HFS/HFS+        af01 Apple RAID            af02 Apple RAID offline
af03 Apple label           af04 AppleTV recovery      af05 Apple Core Storage
be00 Solaris boot          bf00 Solaris root          bf01 Solaris /usr & Mac Z
bf02 Solaris swap          bf03 Solaris backup        bf04 Solaris /var
bf05 Solaris /home         bf06 Solaris alternate se  bf07 Solaris Reserved 1
bf08 Solaris Reserved 2    bf09 Solaris Reserved 3    bf0a Solaris Reserved 4
bf0b Solaris Reserved 5    c001 HP-UX data            c002 HP-UX service
ed00 Sony system partitio  ef00 EFI System            ef01 MBR partition scheme
ef02 BIOS boot partition   fb00 VMWare VMFS           fb01 VMWare reserved
fc00 VMWare kcore crash p  fd00 Linux RAID
Hex code or GUID (L to show codes, Enter = 8300): 8e00
Changed type of partition to 'Linux LVM'

Command (? for help): w

Final checks complete. About to write GPT data. THIS WILL OVERWRITE EXISTING PARTITIONS!!

Do you want to proceed? (Y/N): y
OK; writing new GUID partition table (GPT) to /dev/mapper/oradata.
Warning: The kernel is still using the old partition table.
The new table will be used at the next reboot.
The operation has completed successfully. |

| # partprobe /dev/mapper/oradata |

| # yum install gfs2-utils lvm2-cluster -y |

| # systemctl stop lvm2-lvmetad |

| # lvmconf --enable-cluster

# cat /etc/lvm/lvm.conf |grep locking_type |grep -v "#" |
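After `lvmconf --enable-cluster`, the active `locking_type` in lvm.conf should be 3 (clustered locking). The check above can be turned into a small function; this is a sketch with a hypothetical helper name, and it assumes the setting is written as `locking_type = N` with spaces around the equals sign, as in the stock file.

```shell
#!/usr/bin/env bash
# lvm_locking_type FILE: print the active (uncommented) locking_type value
# from an lvm.conf-style file. Hypothetical helper name.
lvm_locking_type() {
    awk '$1 == "locking_type" && $2 == "=" { print $3; exit }' "$1"
}
```

On each node, `[ "$(lvm_locking_type /etc/lvm/lvm.conf)" = 3 ] && echo "clustered locking enabled"` gives a one-line confirmation.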

| # pcs resource create dlm ocf:pacemaker:controld op monitor interval=30s on-fail=fence clone interleave=true ordered=true |

| # pcs resource create clvmd ocf:heartbeat:clvm op monitor interval=30s on-fail=fence clone interleave=true ordered=true |

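| # vgcreate -Ay -cy oravg /dev/mapper/oradata    # run on sdb1
# vgs    # check on sdb2
# vgdisplay |

Store the required files and components locally on each node rather than on the shared storage, so that the Pacemaker daemons can check the resources and access each node correctly.

| # lvcreate -L 80G -n ora12lv oravg    # run on sdb1 |

| # vgdisplay    # check on sdb2
# lvs |

Adding the noatime mount option can improve GFS2 performance.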

| # mkfs.gfs2 -p lock_dlm -t sdb:gfs2orac12 -j 2 /dev/oravg/ora12lv |

| # pcs resource create gfs2oradata12 Filesystem device="/dev/oravg/ora12lv" directory="/oradata12" fstype="gfs2" options="noatime" op monitor interval=10s on-fail=fence clone interleave=true |

| # df -h |

| pcs resource create sdb-vip ocf:heartbeat:IPaddr2 ip=172.26.1.214 cidr_netmask=24 op monitor interval=30s |

| # pcs status |

| # pcs resource show |

| # pcs resource group add SDB_RESOURCE sdb-vip |

| # pcs resource group list |

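<1> The sdb storage is mounted by only one node at a time and follows the VIP.

<2> Cluster resources start in the order vip -> dlm -> clvmd -> gfs2. The dlm -> clvmd -> gfs2 part of this order is the ordering that GFS2 requires (highlighted in red in the original document).

Everything else can be configured as needed; in this lab all resources follow the VIP.

<3> Cluster resources may move freely between the two nodes, with no preference for either node.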

| # pcs constraint order start sdb-vip then dlm-clone |

| # pcs constraint order start dlm-clone then clvmd-clone |

| # pcs constraint order start clvmd-clone then gfs2oradata12-clone |

| # pcs constraint colocation add dlm-clone with sdb-vip
# pcs constraint colocation add clvmd-clone with dlm-clone
# pcs constraint colocation add gfs2oradata12-clone with clvmd-clone
# pcs constraint show --full
Location Constraints:
Ordering Constraints:
  start sdb-vip then start dlm-clone (kind:Mandatory) (id:order-sdb-vip-dlm-clone-mandatory)
  start dlm-clone then start clvmd-clone (kind:Mandatory) (id:order-dlm-clone-clvmd-clone-mandatory)
  start clvmd-clone then start gfs2oradata12-clone (kind:Mandatory) (id:order-clvmd-clone-gfs2oradata12-clone-mandatory)
Colocation Constraints:
  dlm-clone with sdb-vip (score:INFINITY) (id:colocation-dlm-clone-sdb-vip-INFINITY)
  clvmd-clone with dlm-clone (score:INFINITY) (id:colocation-clvmd-clone-dlm-clone-INFINITY)
  gfs2oradata12-clone with clvmd-clone (score:INFINITY) (id:colocation-gfs2oradata12-clone-clvmd-clone-INFINITY)
Ticket Constraints: |
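The order and colocation constraints above form a simple chain: each resource starts after, and runs with, the one before it. The sketch below prints the corresponding `pcs` commands for any such chain so they can be reviewed before being run; the helper name `order_chain` is hypothetical and nothing is executed.

```shell
#!/usr/bin/env bash
# order_chain RES...: print the `pcs constraint order` and
# `pcs constraint colocation` commands that chain the given resources in
# start order. It only prints text. Hypothetical helper name.
order_chain() {
    local prev=$1 next
    shift
    for next in "$@"; do
        echo "pcs constraint order start $prev then $next"
        echo "pcs constraint colocation add $next with $prev"
        prev=$next
    done
}

order_chain sdb-vip dlm-clone clvmd-clone gfs2oradata12-clone
```

For the four resources in this lab it prints three order commands and three colocation commands, matching the ones entered by hand above.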

| # pcs status |

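By default, no-quorum-policy is set to stop, meaning that once quorum is lost, all resources on the remaining partition are stopped immediately. This default is usually the safest and best option, but unlike most resources, GFS2 requires quorum to function. When quorum is lost, neither the applications using the GFS2 mount nor the GFS2 mount itself can be stopped correctly. Any attempt to stop these resources without quorum fails, which ultimately results in the entire cluster being fenced every time quorum is lost.

To address this, set no-quorum-policy=freeze when GFS2 is in use. With this setting, when quorum is lost the remaining partition takes no action until quorum is regained.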

| # pcs property set no-quorum-policy=freeze |

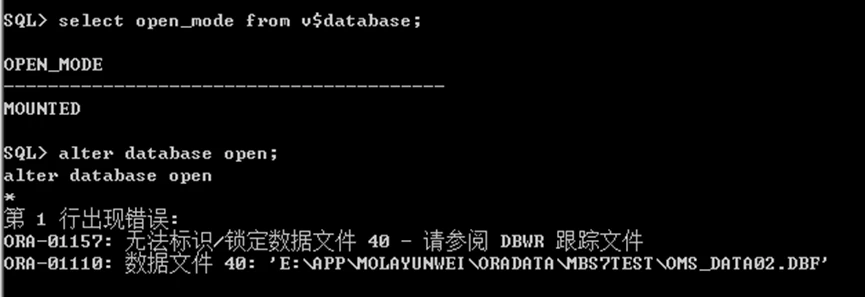

3. Installing Oracle DB

3.1 Pre-installation preparation

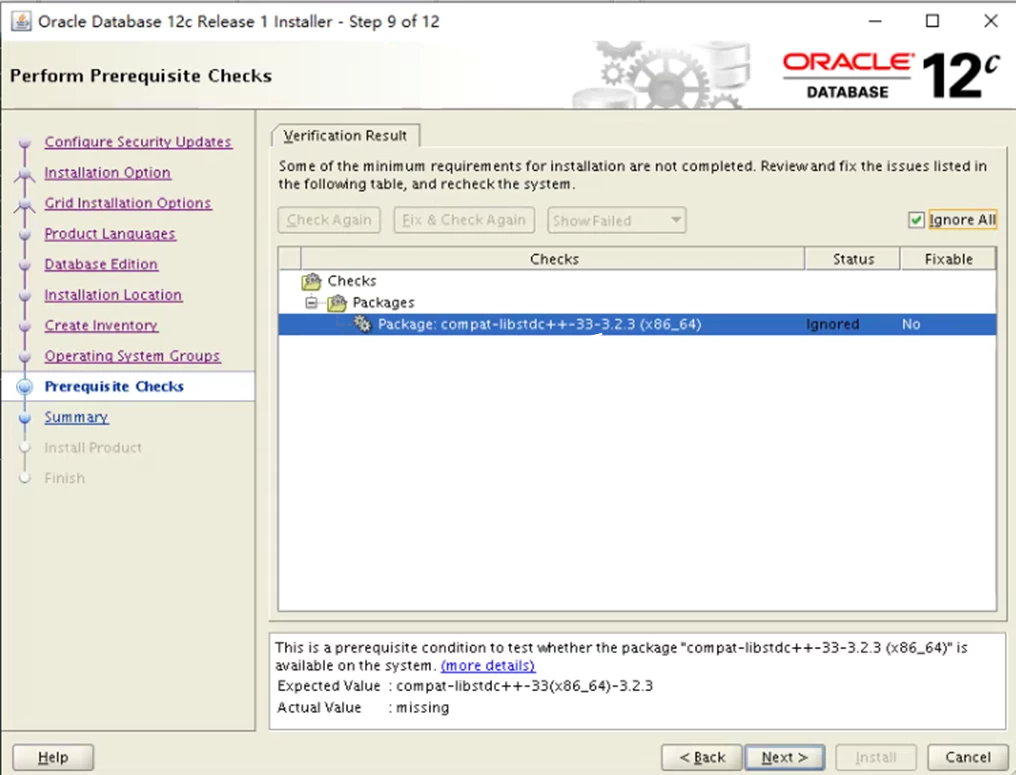

3.1.1 Install the required dependency packages

| yum install -y binutils.x86_64 compat-libcap1.x86_64 gcc.x86_64 gcc-c++.x86_64 glibc.i686 glibc.x86_64 \
    glibc-devel.i686 glibc-devel.x86_64 ksh compat-libstdc++-33 libaio.i686 libaio.x86_64 libaio-devel.i686 \
    libaio-devel.x86_64 libgcc.i686 libgcc.x86_64 libstdc++.i686 libstdc++.x86_64 libstdc++-devel.i686 \
    libstdc++-devel.x86_64 libXi.i686 libXi.x86_64 libXtst.i686 libXtst.x86_64 make.x86_64 sysstat.x86_64 \
    zip unzip |

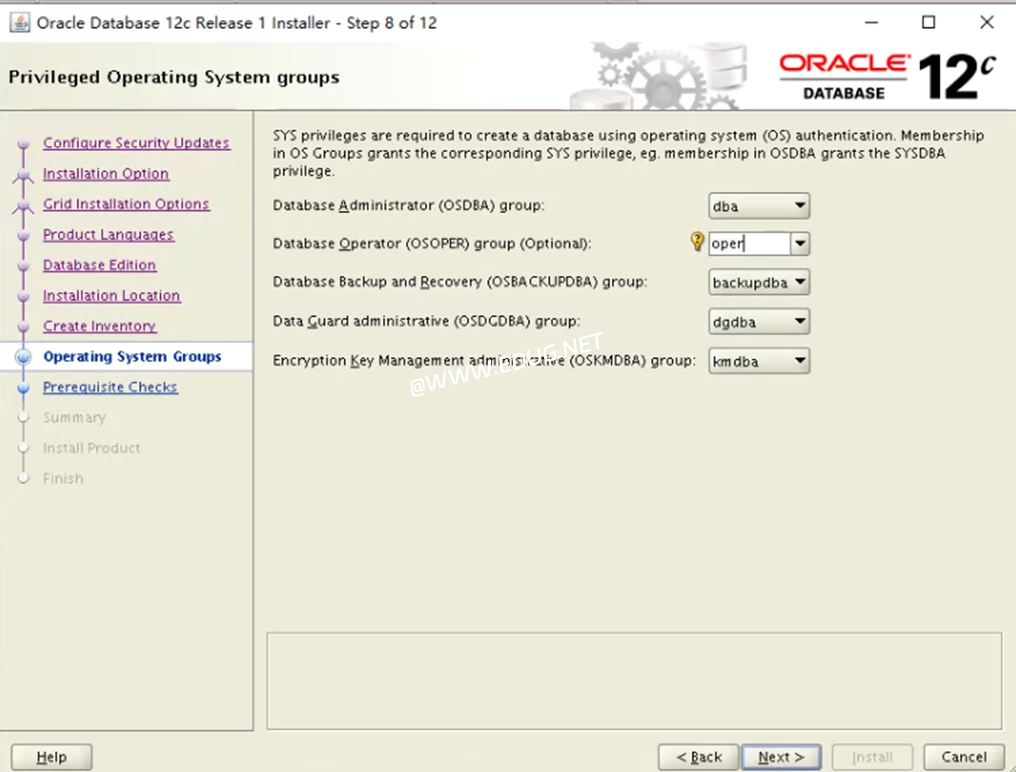

3.1.2 Create the Oracle users and groups

| /usr/sbin/groupadd -g 1001 asmadmin
/usr/sbin/groupadd -g 1002 asmdba
/usr/sbin/groupadd -g 1003 asmoper
/usr/sbin/groupadd -g 1004 dba
/usr/sbin/groupadd -g 1005 oper
/usr/sbin/groupadd -g 1006 oinstall
/usr/sbin/groupadd -g 1007 backupdba
/usr/sbin/groupadd -g 1008 dgdba
/usr/sbin/groupadd -g 1009 kmdba
/usr/sbin/groupadd -g 1010 racdba
/usr/sbin/useradd -u 1001 -g oinstall -G dba,asmdba,backupdba,dgdba,kmdba,racdba,oper oracle12
/usr/sbin/useradd -u 1002 -g oinstall -G dba,asmdba,backupdba,dgdba,kmdba,racdba,oper oracle19 |

3.1.3 Set the Oracle user passwords

| # passwd oracle12 |

| # passwd oracle19 |

3.1.4 Configure the kernel parameters

| # vi /etc/sysctl.conf
fs.file-max = 6815744
kernel.sem = 250 32000 100 128
kernel.shmmni = 4096
kernel.shmall = 1073741824
kernel.shmmax = 4398046511104
net.core.rmem_default = 262144
net.core.rmem_max = 4194304
net.core.wmem_default = 262144
net.core.wmem_max = 1048576
fs.aio-max-nr = 1048576
net.ipv4.ip_local_port_range = 9000 65500 |
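Note that kernel.shmmax is expressed in bytes while kernel.shmall is expressed in pages. With a 4096-byte page size, the two values above describe the same amount of memory: 1073741824 × 4096 = 4398046511104. The following sketch shows the conversion; the helper name `shmall_pages` is hypothetical.

```shell
#!/usr/bin/env bash
# shmall_pages SHMMAX_BYTES [PAGE_SIZE]: print SHMMAX expressed in pages,
# rounding up. PAGE_SIZE defaults to `getconf PAGE_SIZE`. Hypothetical helper.
shmall_pages() {
    local bytes=$1 page=${2:-$(getconf PAGE_SIZE)}
    echo $(( (bytes + page - 1) / page ))
}

shmall_pages 4398046511104 4096   # prints 1073741824
```

On a host with a different page size, calling the function without the second argument gives the matching kernel.shmall value for a chosen kernel.shmmax.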

3.1.5 Load the settings

| # sysctl -p    # load the settings
# sysctl -a    # verify the values |

3.1.6 Adjust the resource limits for Oracle

| # vi /etc/security/limits.conf
* soft nofile 1024
* hard nofile 65536
* soft nproc 2047
* hard nproc 16384
* soft stack 10240
* hard stack 32768 |

| # ulimit -a |

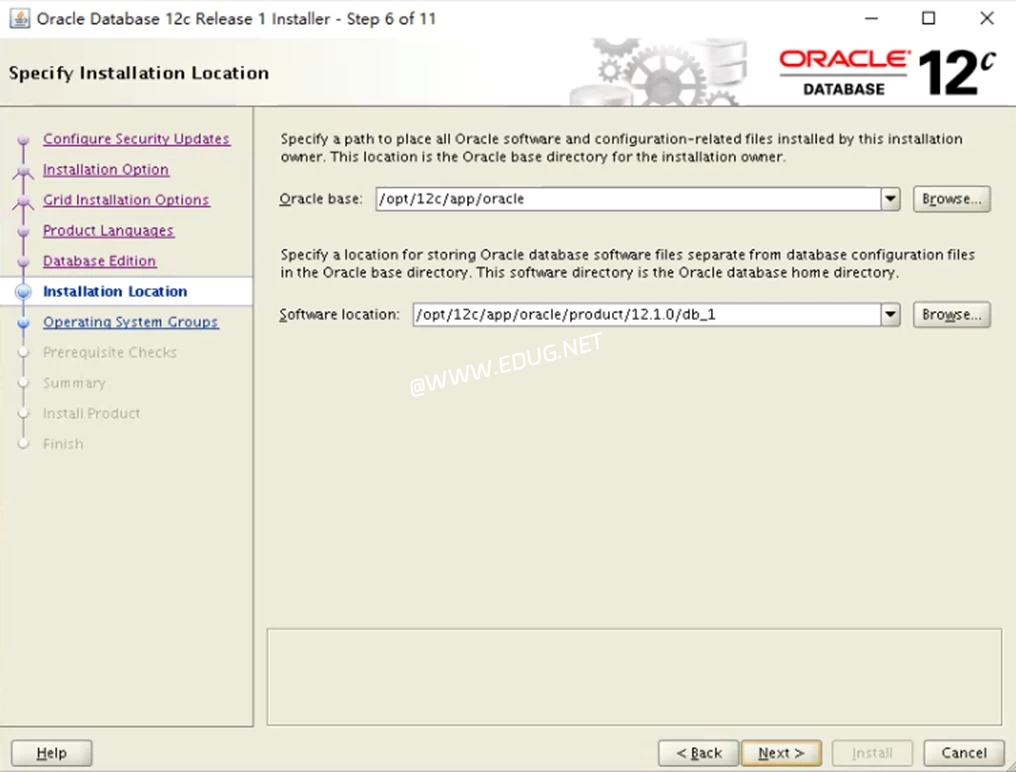

3.1.7 Create the installation directories

| mkdir -p /opt/12c/app/oracle/product/12.1.0.2/db_1
chown -R oracle12:oinstall /opt/12c
chown -R oracle12:oinstall /oradata12/
chmod -R 775 /opt/12c/ |

3.1.8 Set the Oracle user environment variables

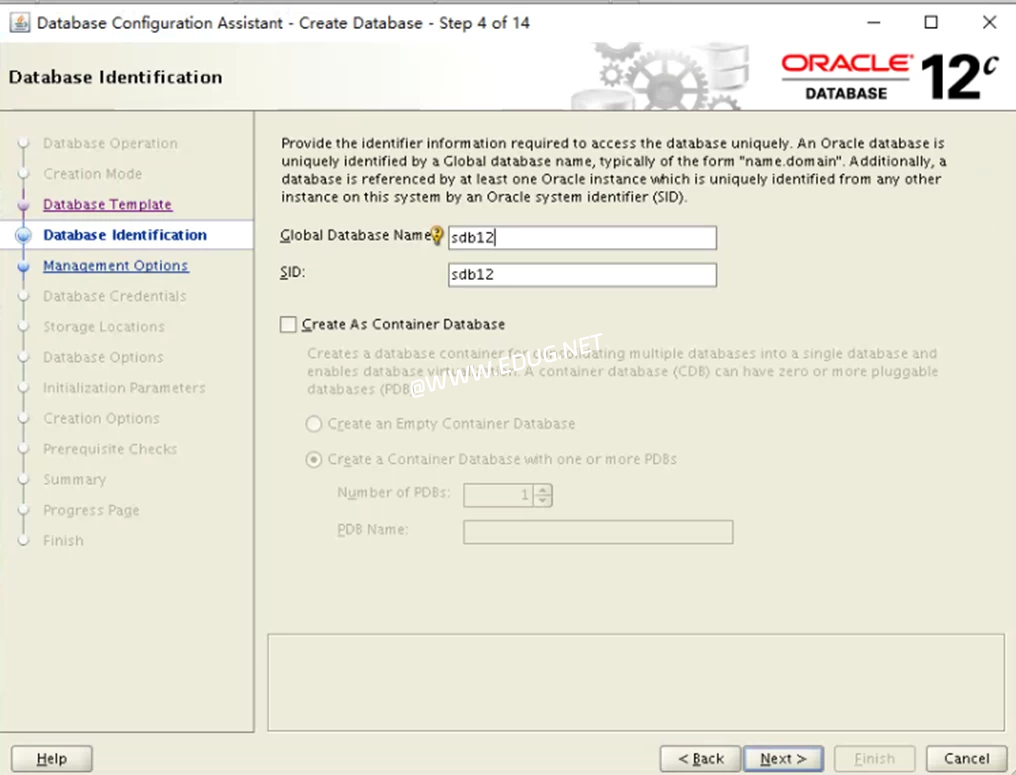

| # vi .bash_profile
export ORACLE_BASE=/opt/12c/app/oracle
export ORACLE_HOME=$ORACLE_BASE/product/12.1.0.2/db_1
export ORACLE_SID=sdb12
export ORACLE_UNQNAME=sdb12
export PATH=/usr/sbin:$PATH
export PATH=$ORACLE_HOME/bin:$PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
export CLASSPATH=$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
export TMP=/tmp
export TMPDIR=$TMP |

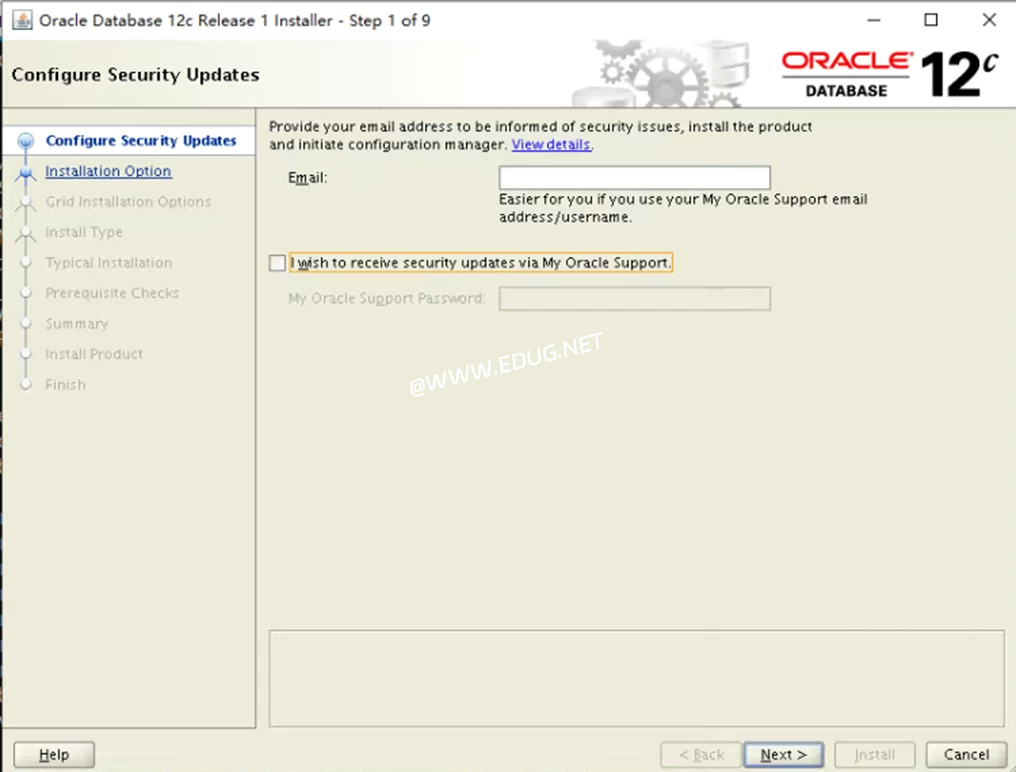

3.2 Installation

3.2.1 Run the GUI installer

| # unzip linuxamd64_12102_database_1of2.zip
# unzip linuxamd64_12102_database_2of2.zip |

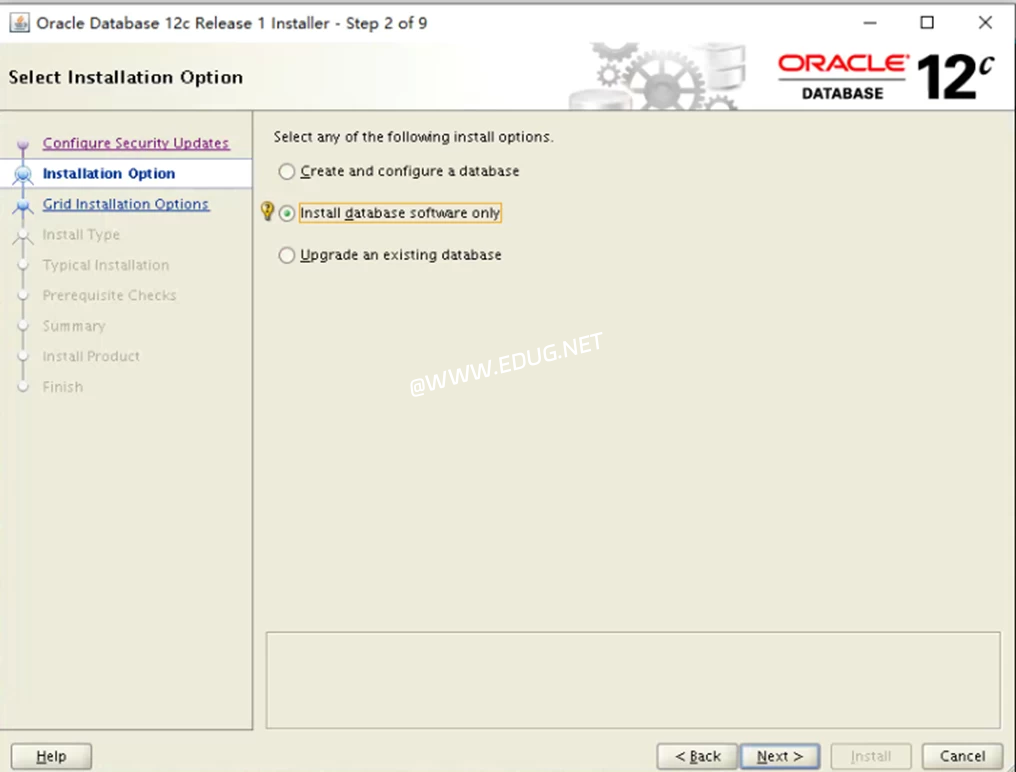

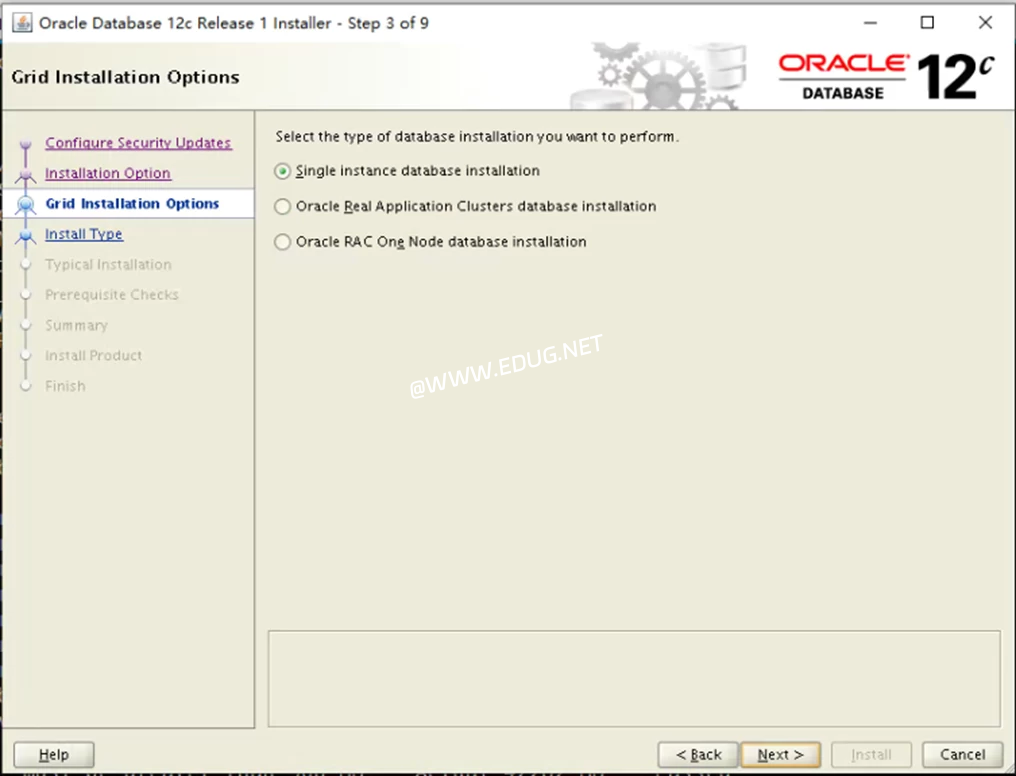

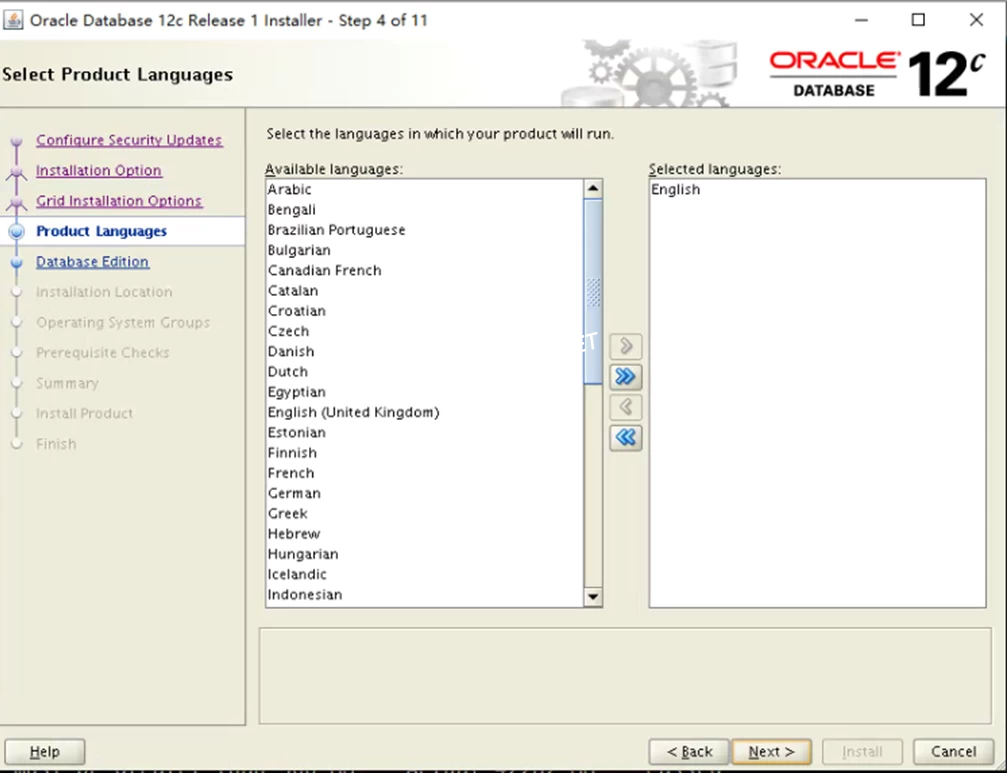

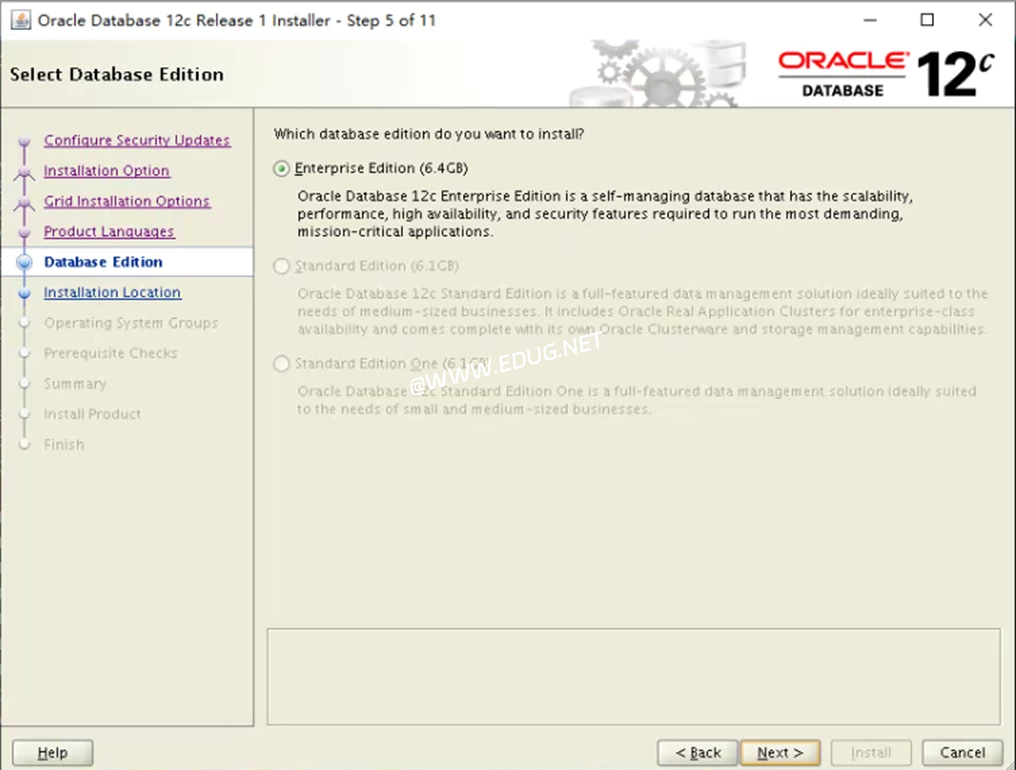

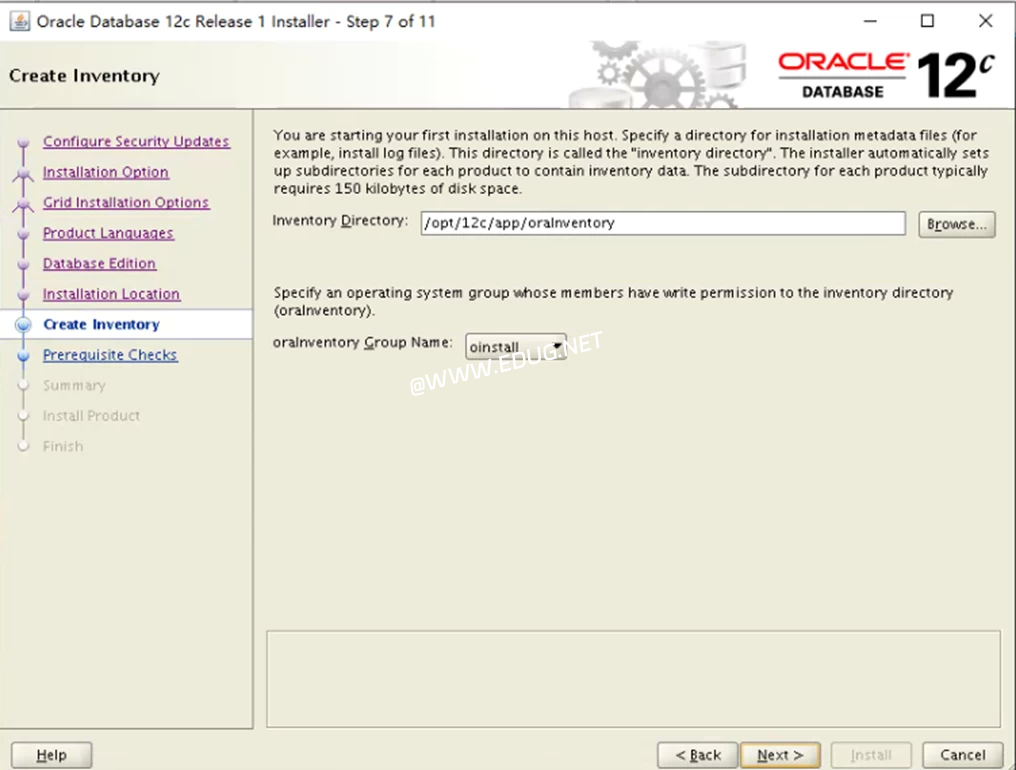

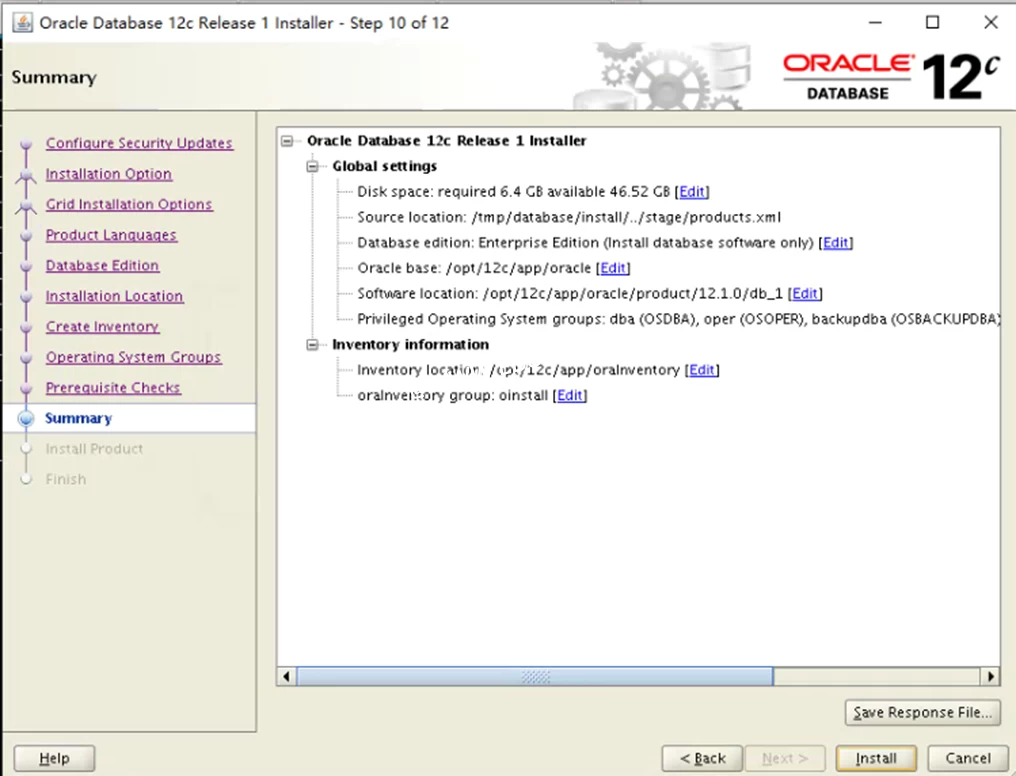

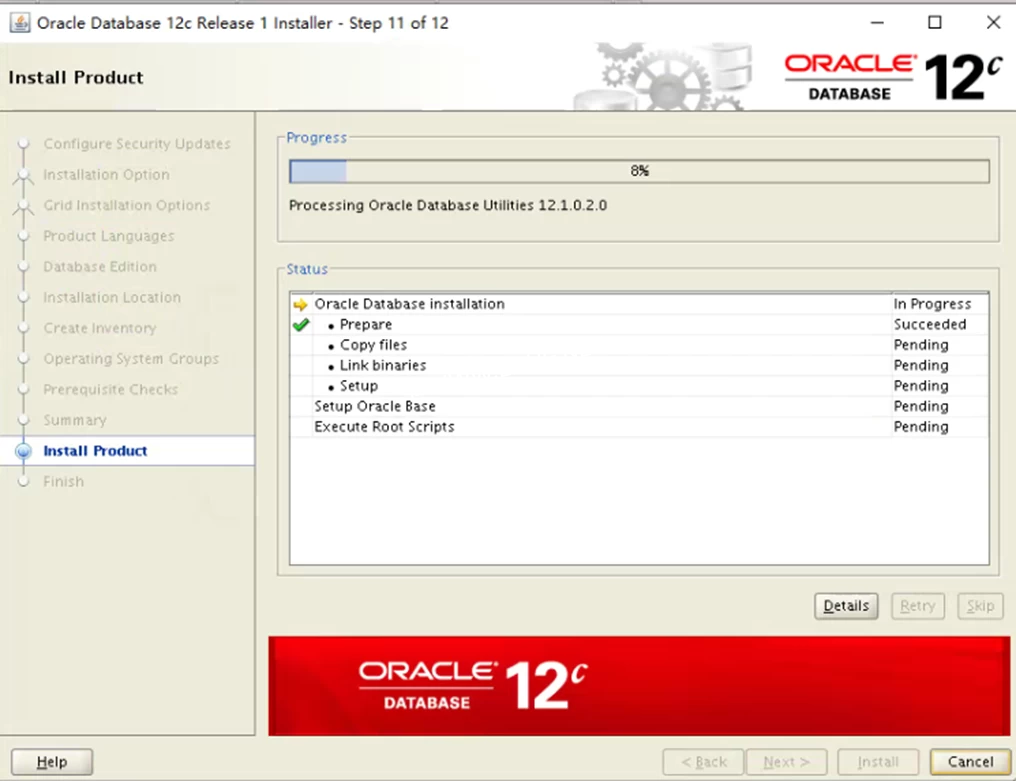

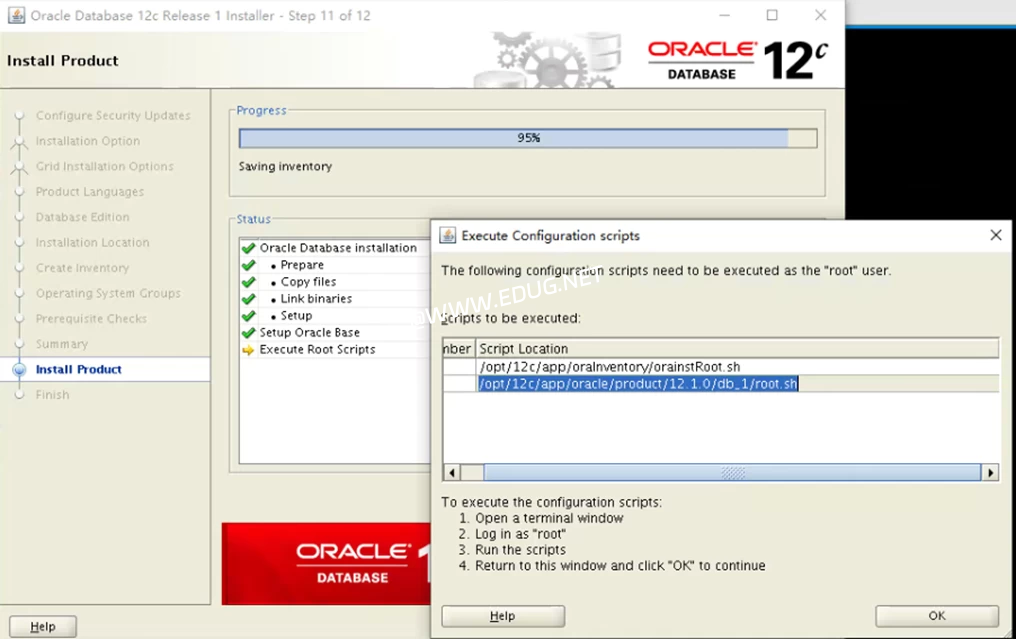

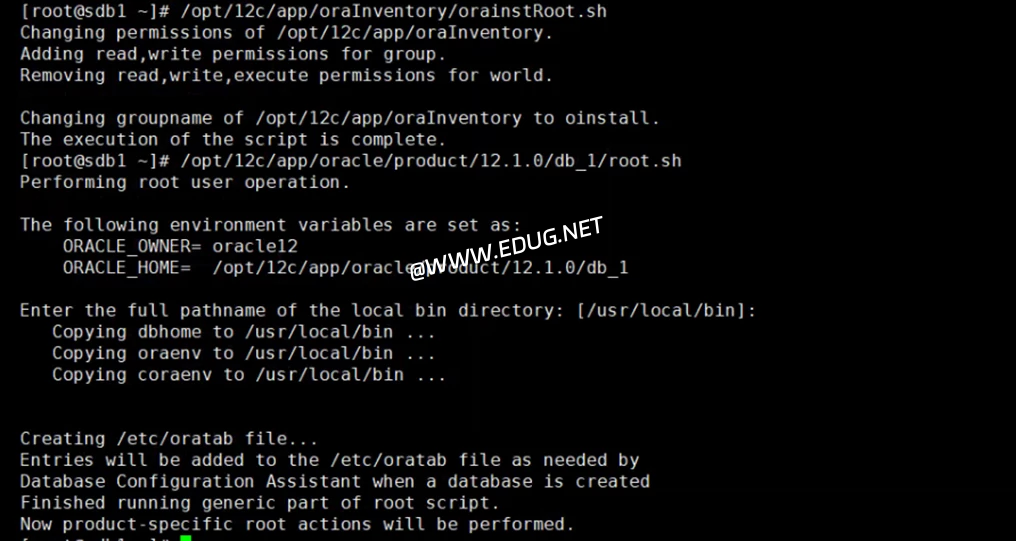

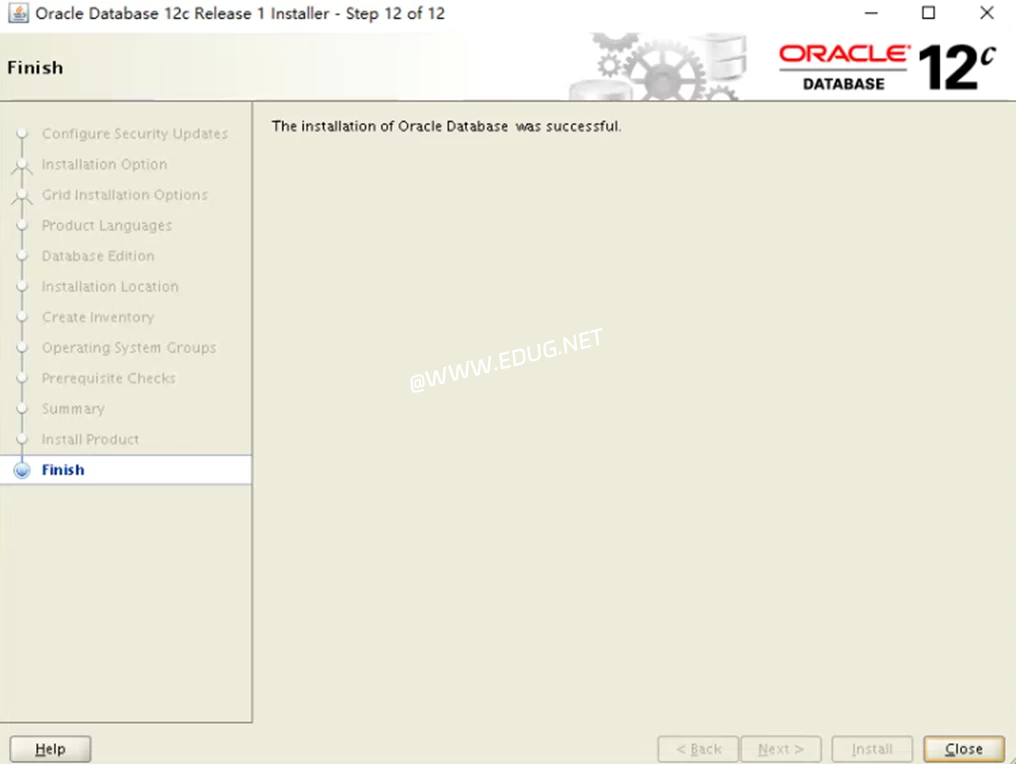

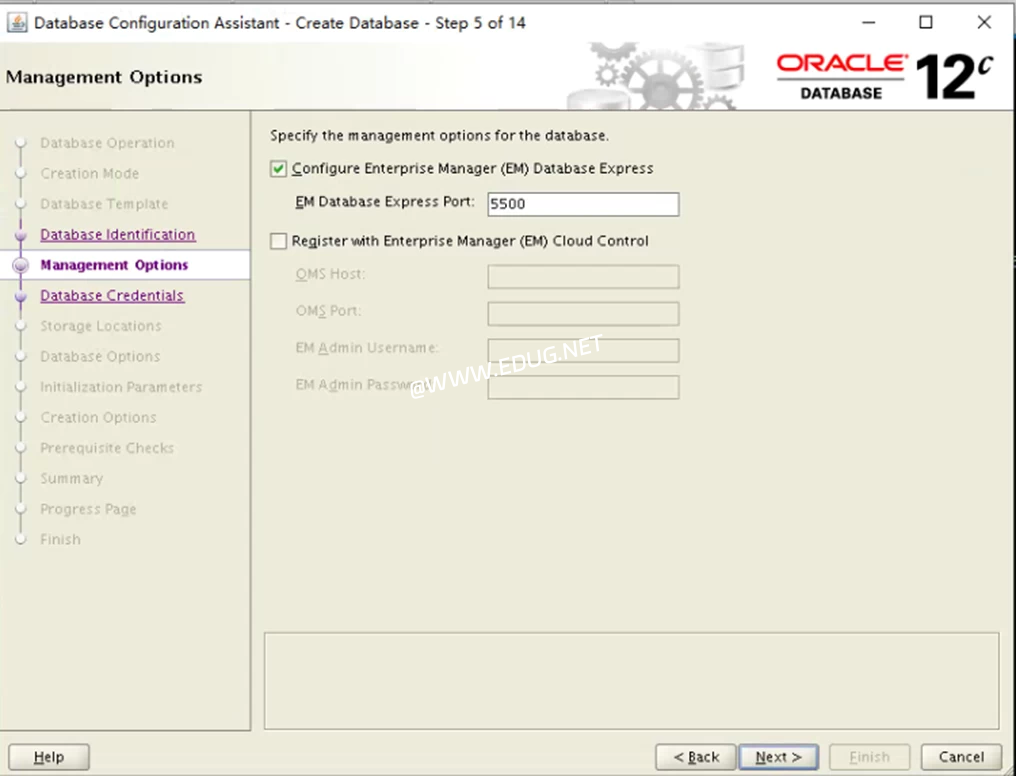

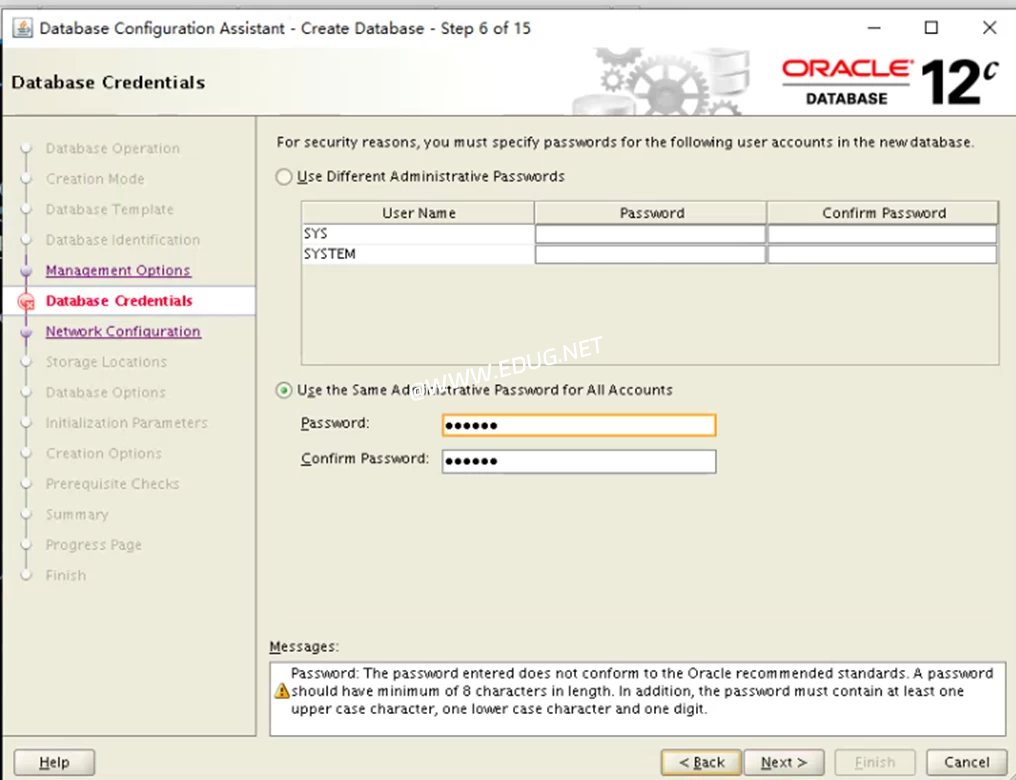

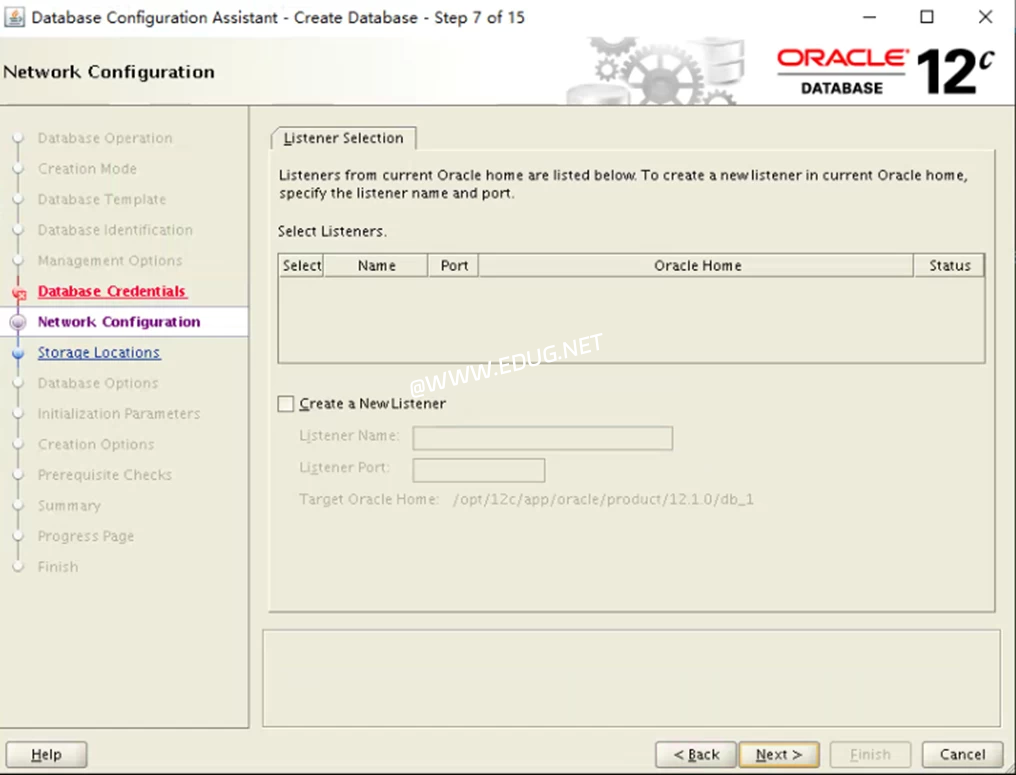

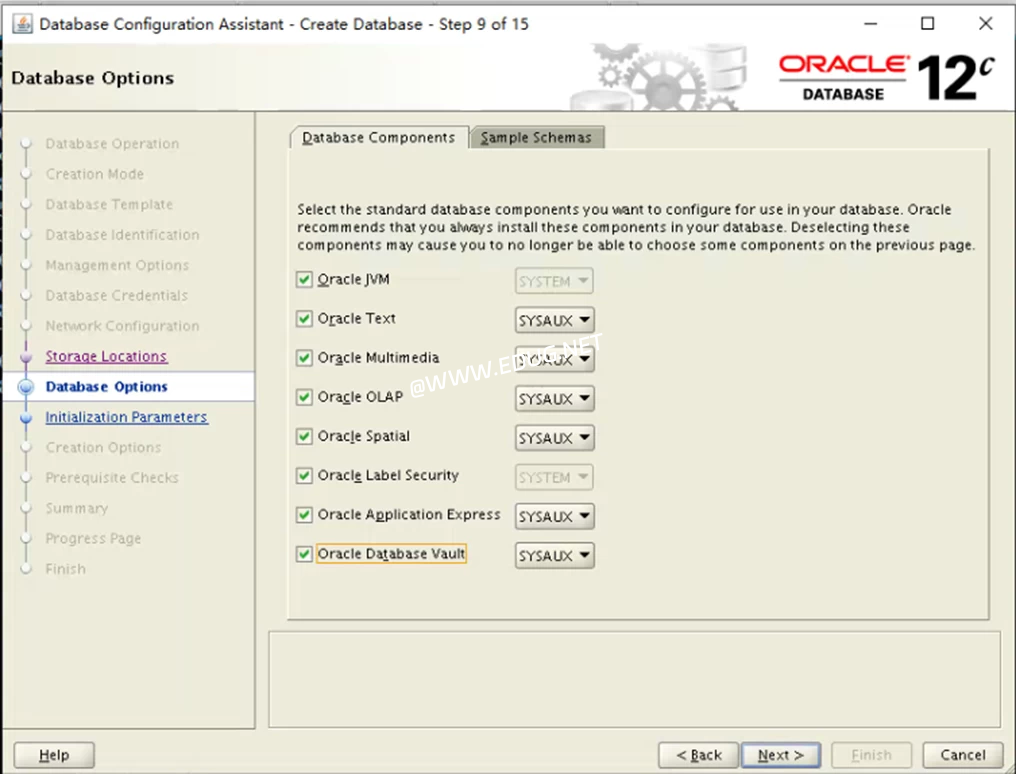

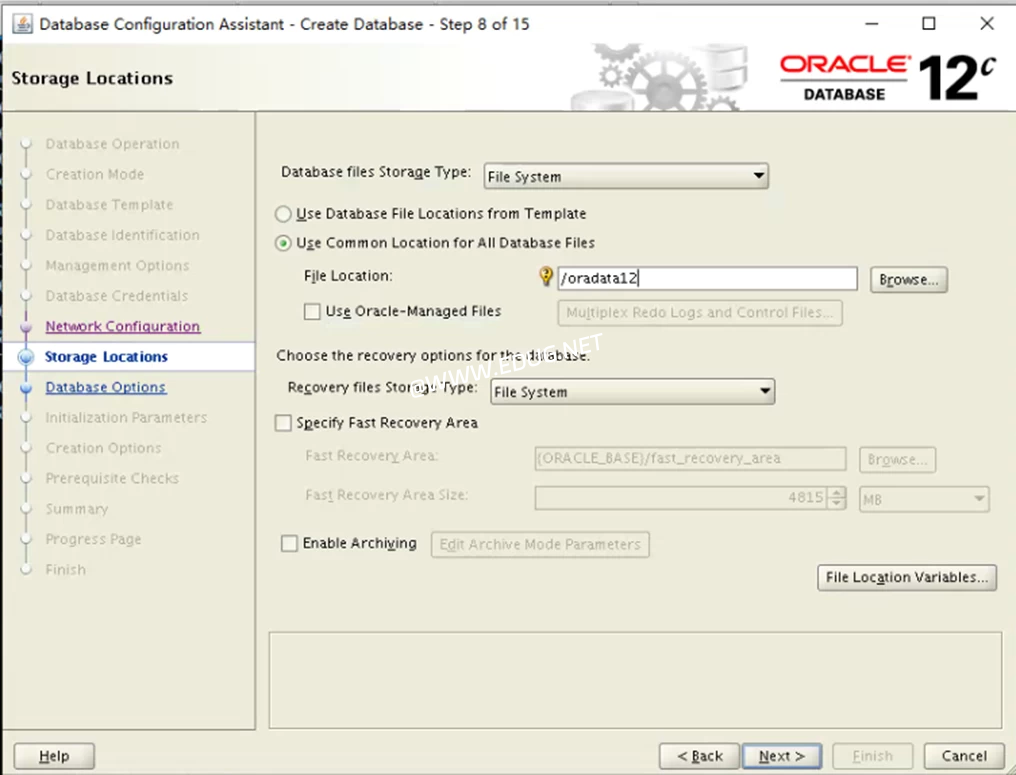

3.2.2 Install the software (see screenshots)

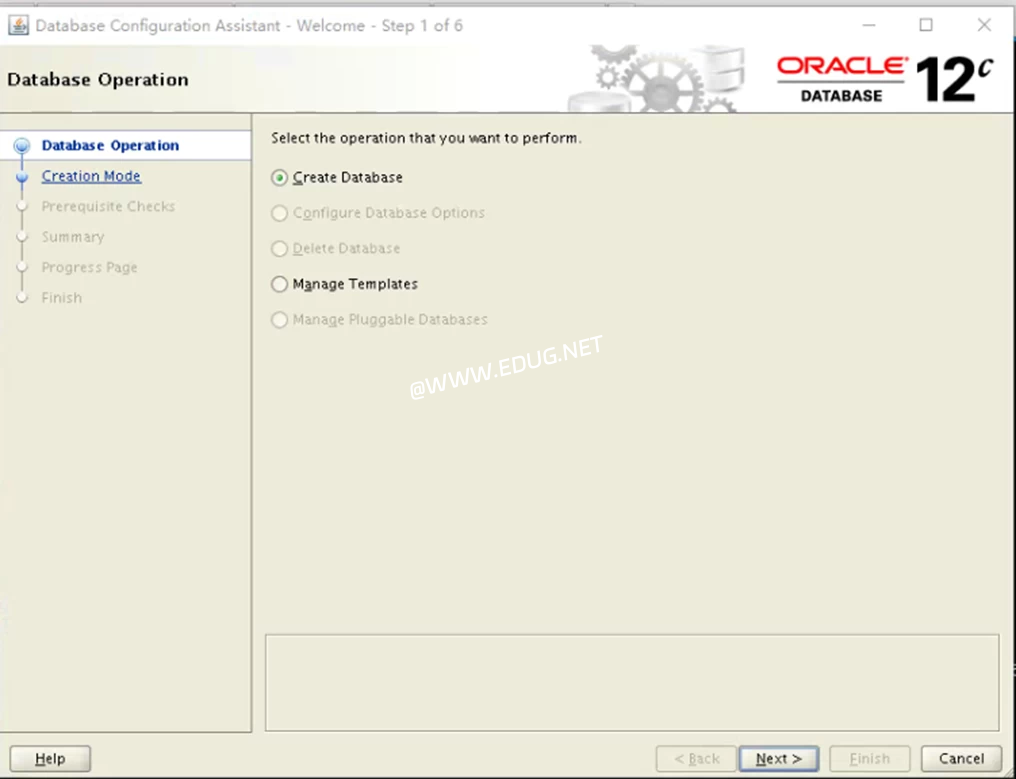

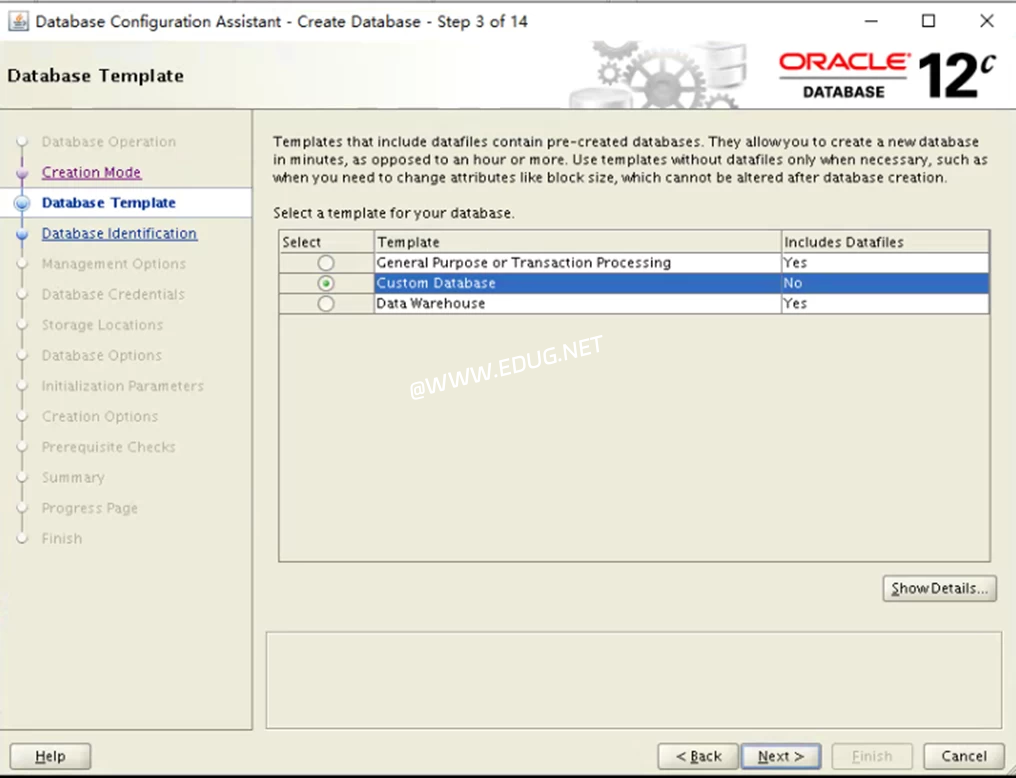

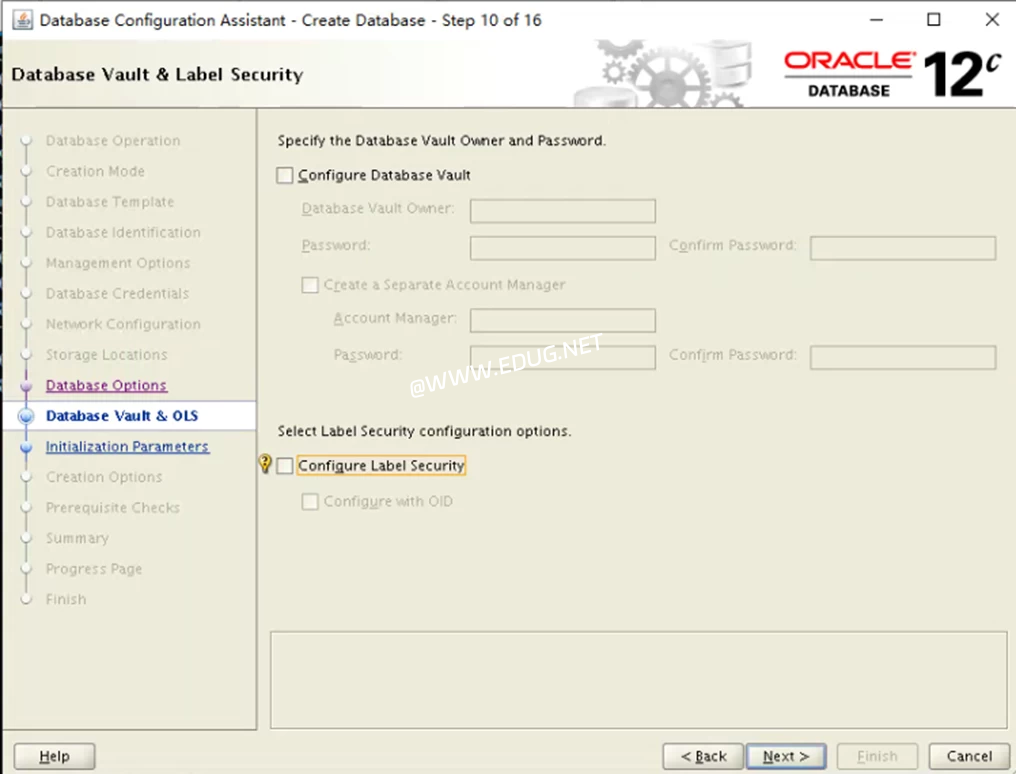

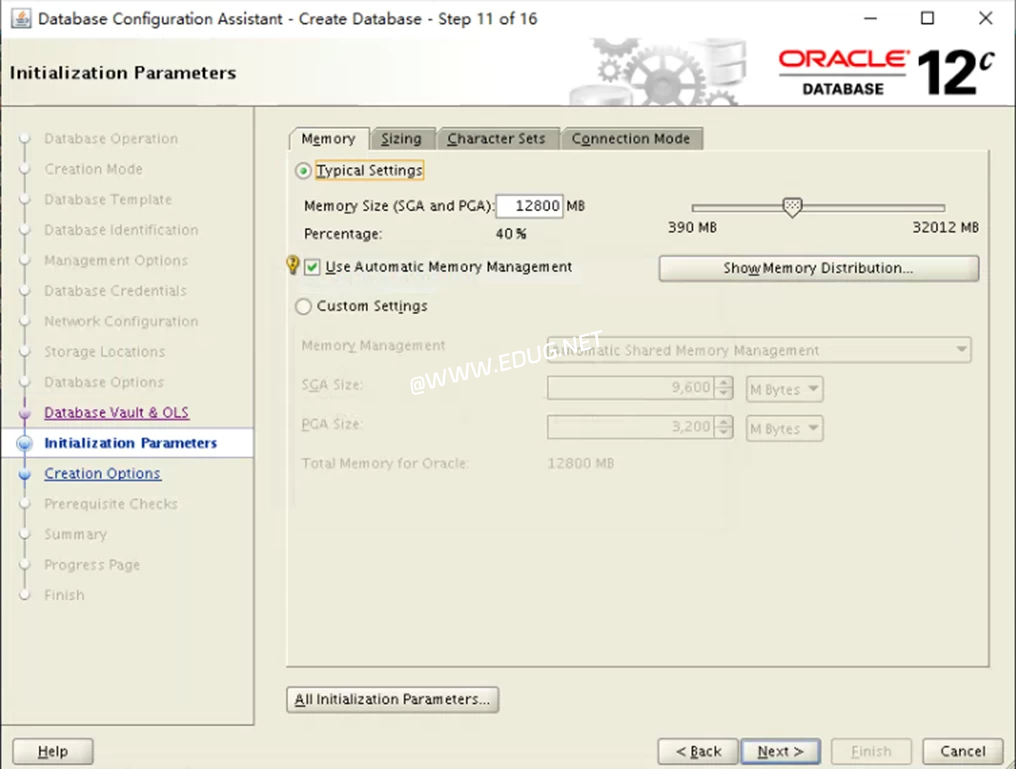

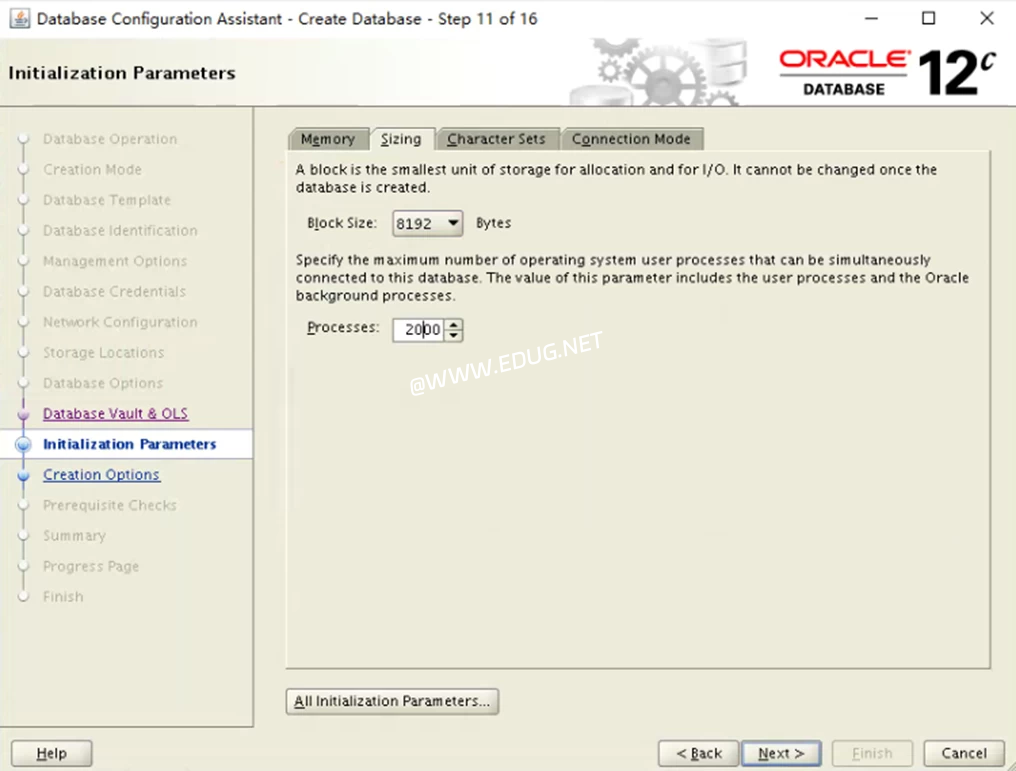

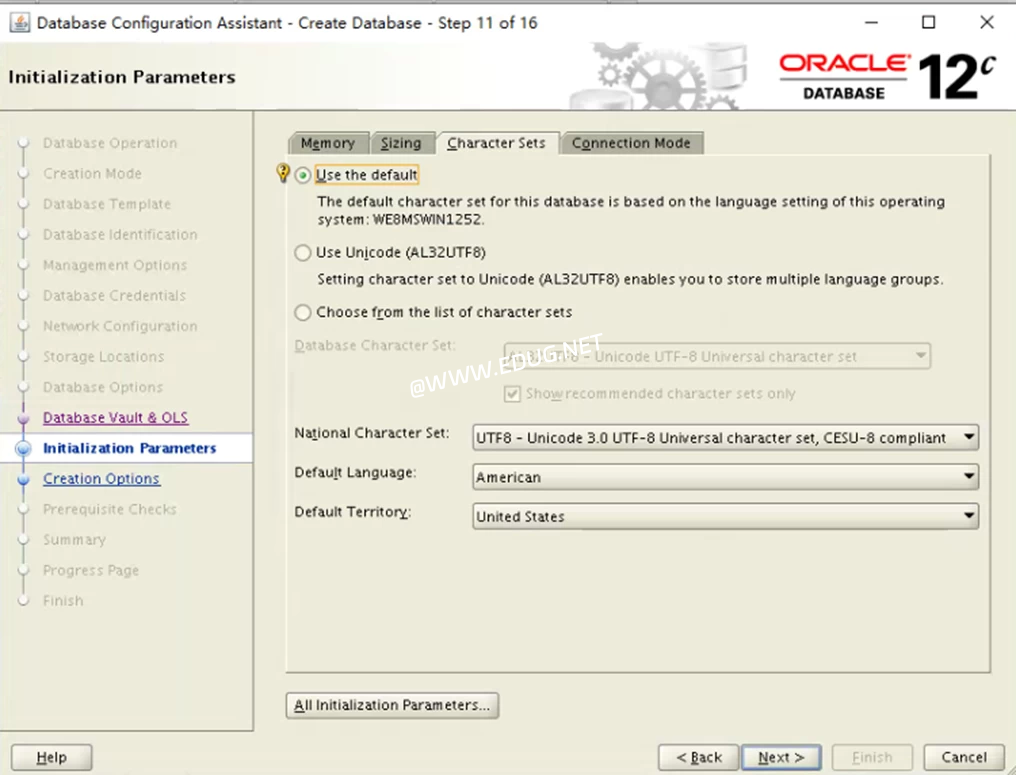

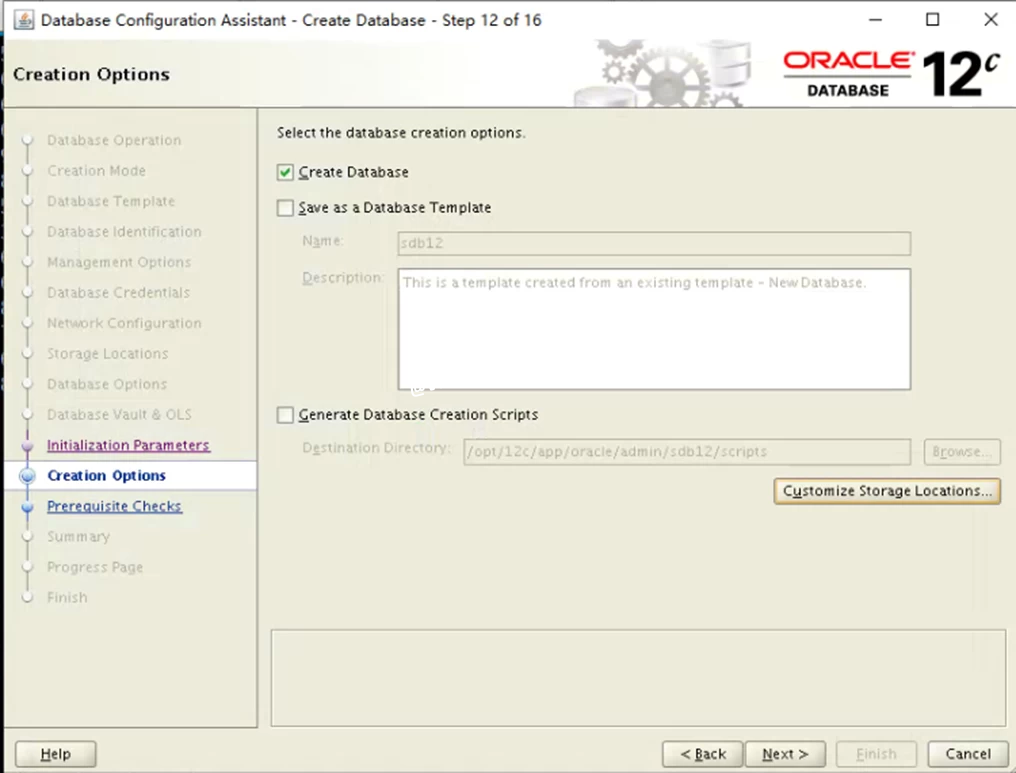

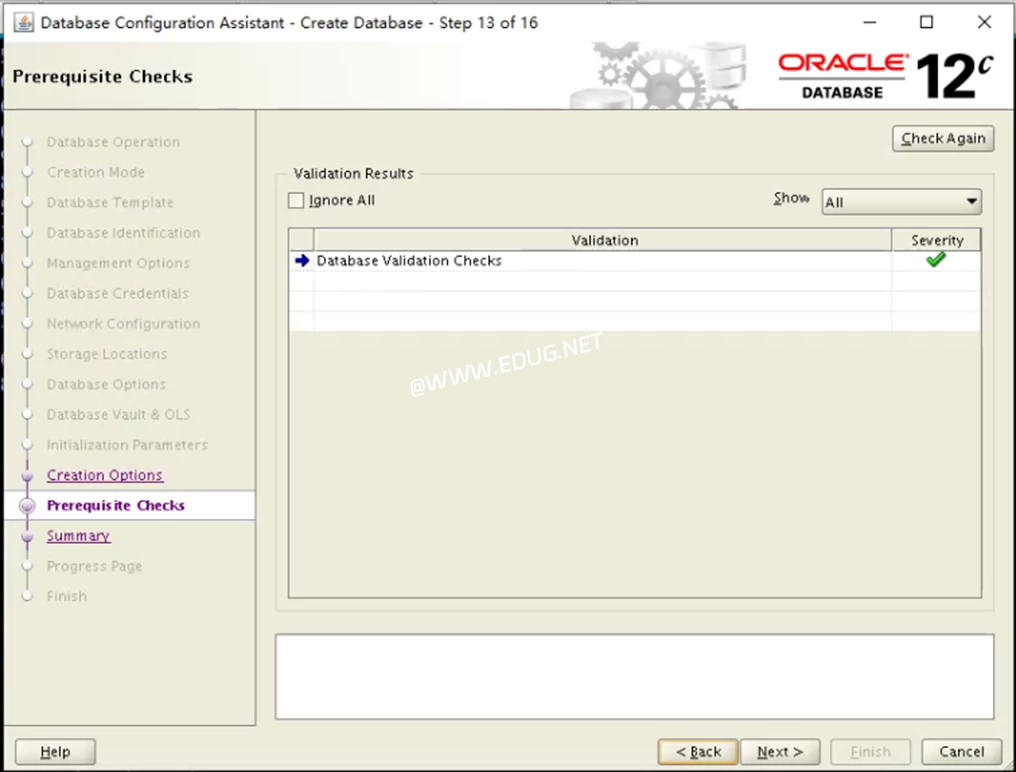

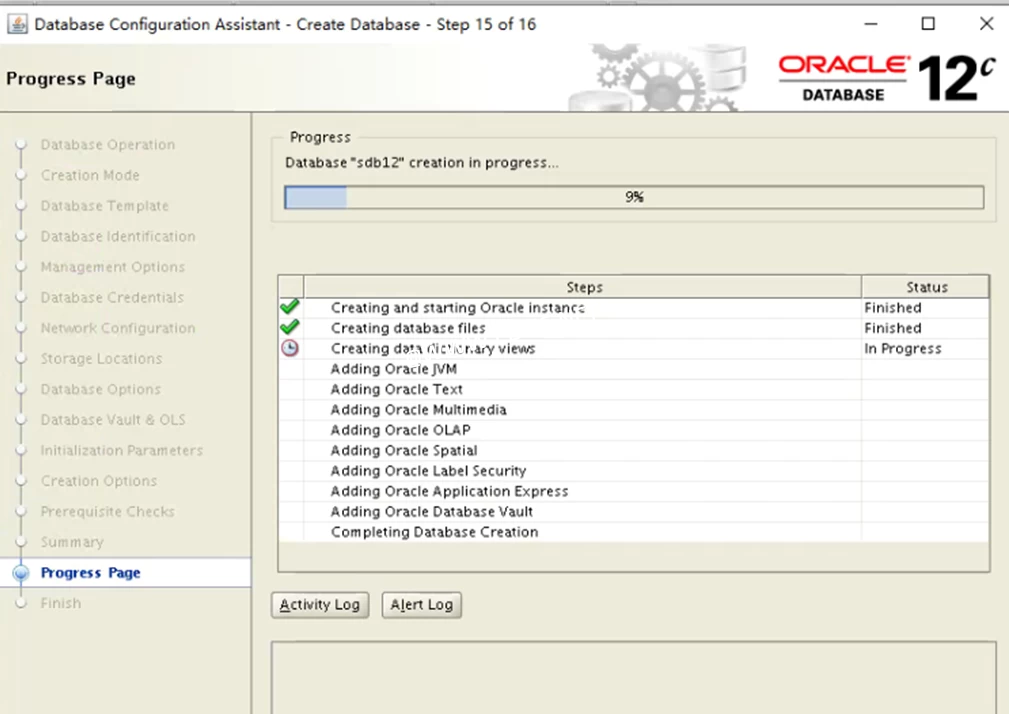

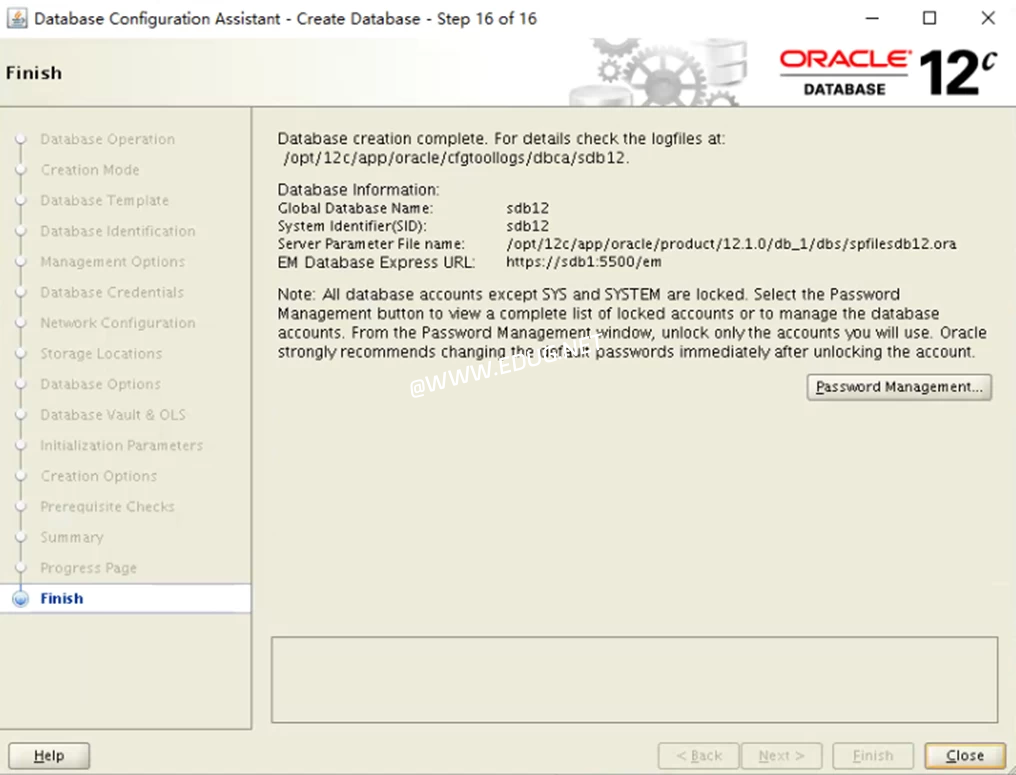

3.2.3 Create the database (see screenshots)

| $ dbca |

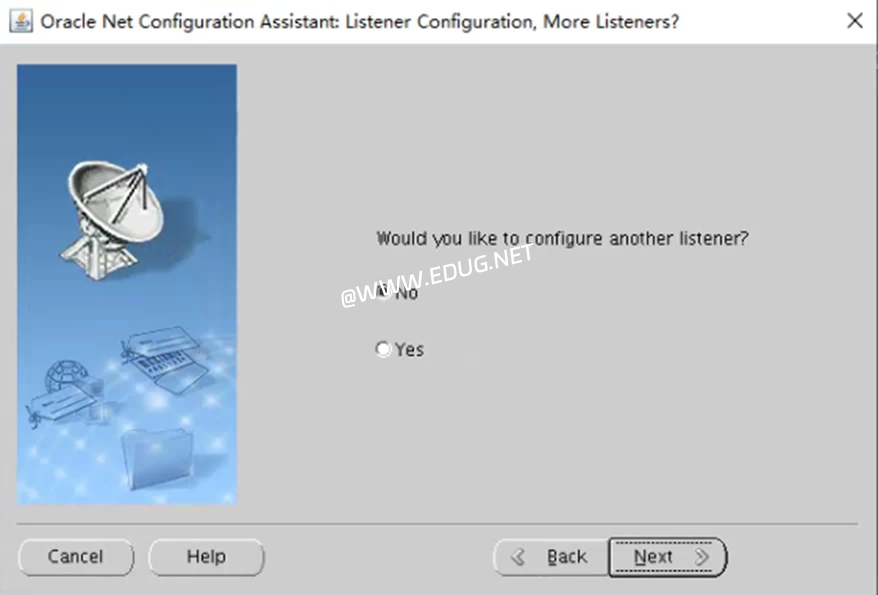

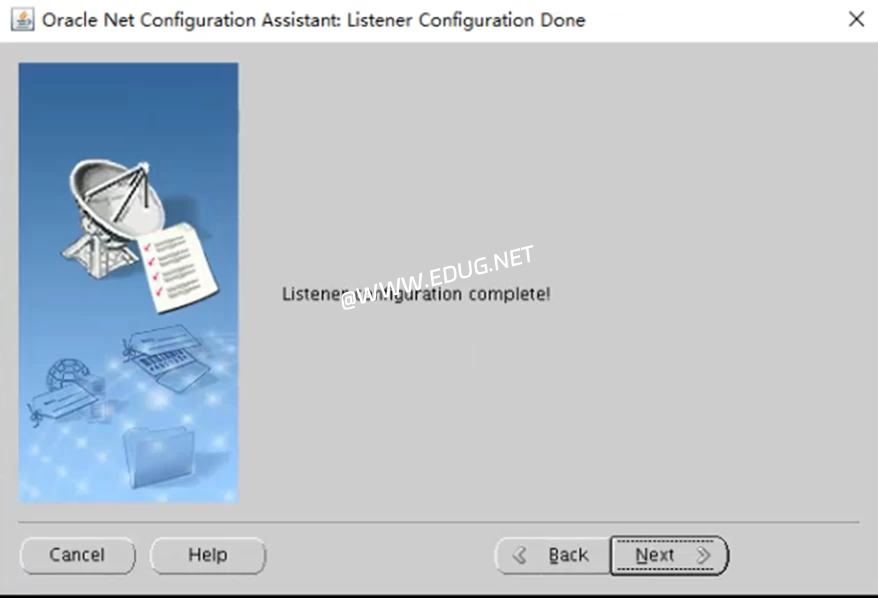

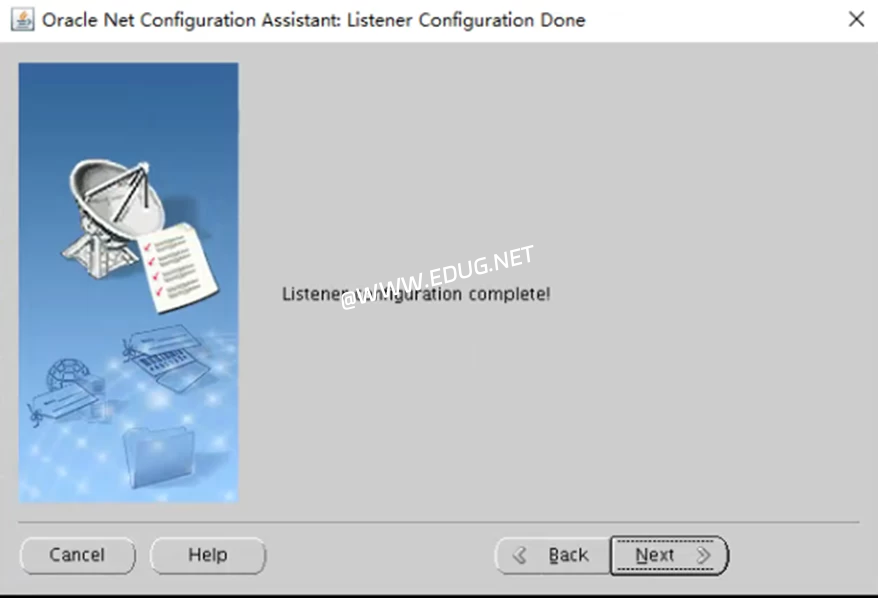

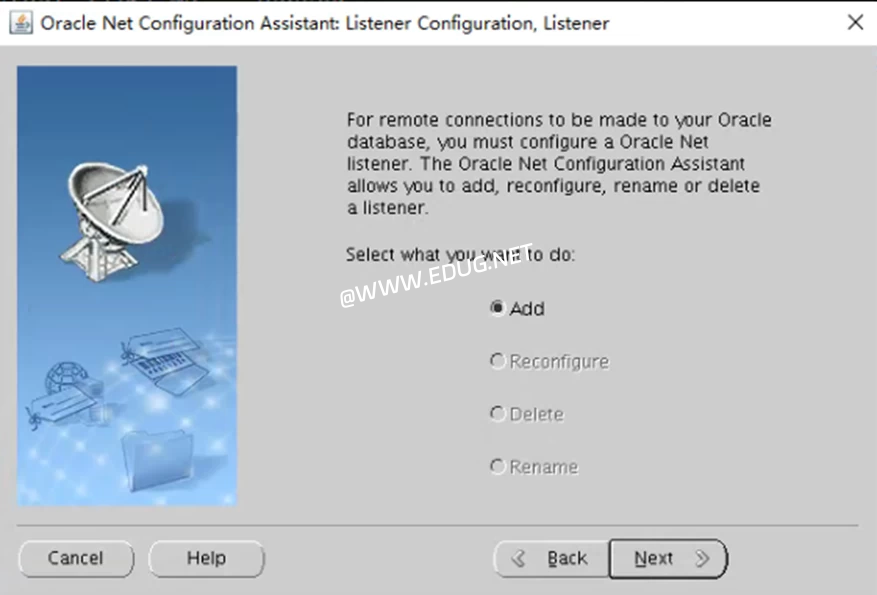

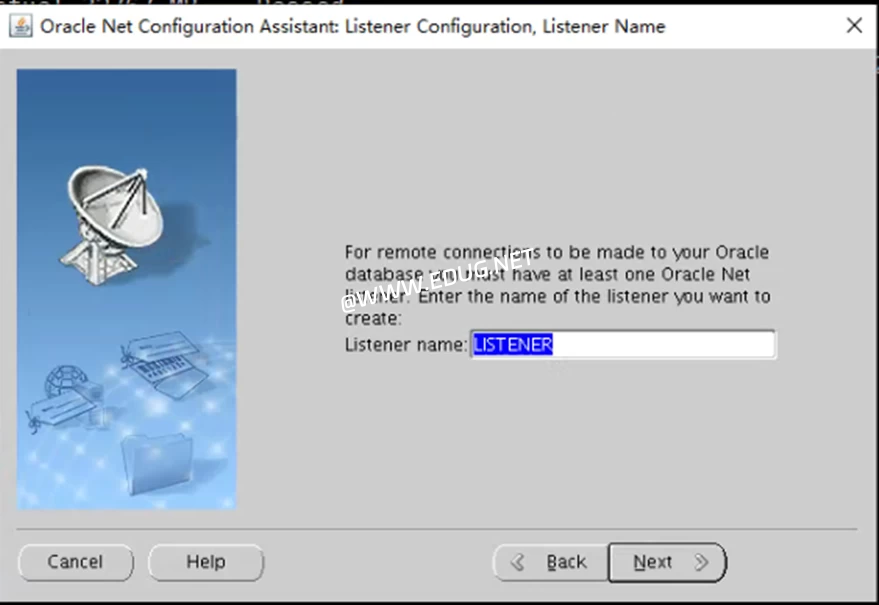

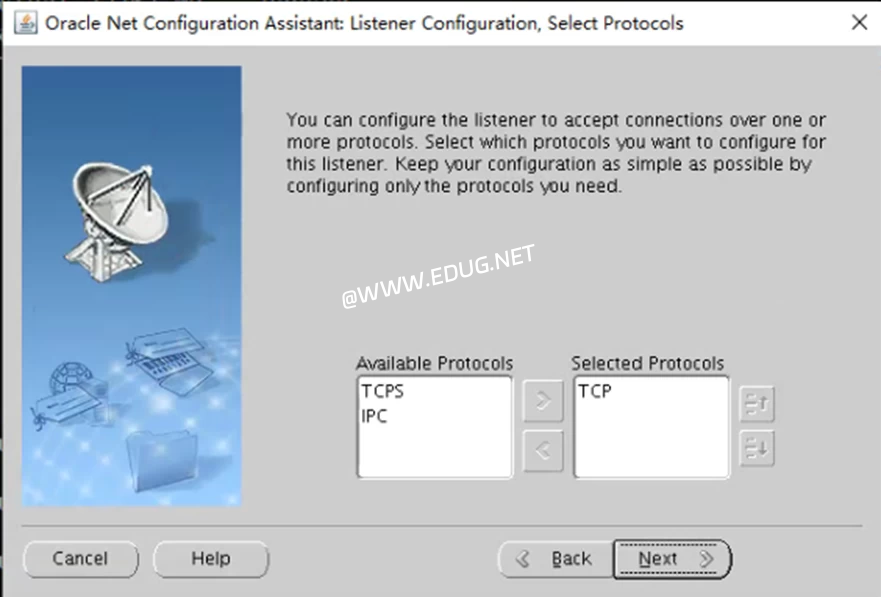

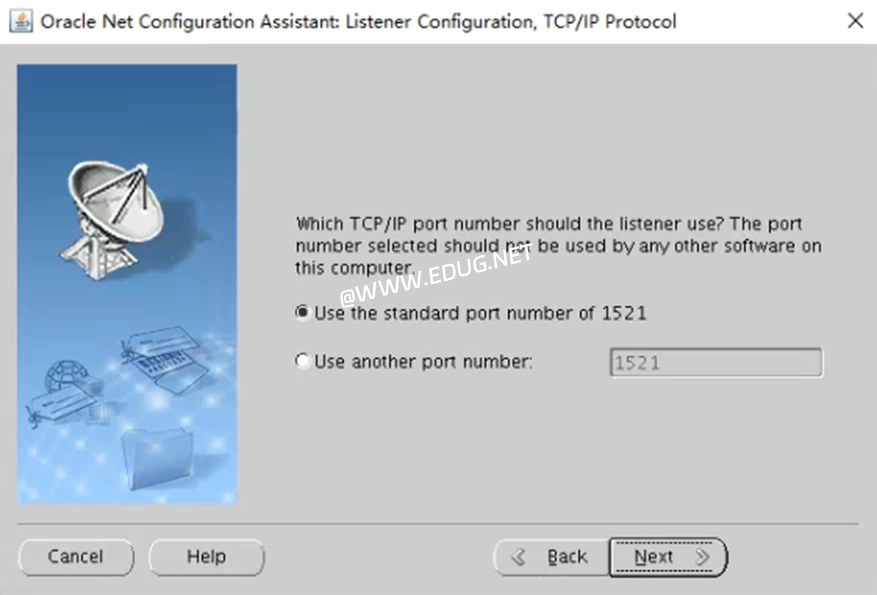

3.2.4 Create the listener (see screenshots)


| $ netca |

4. Configuring the Oracle Cluster Services

Configure the Oracle Listener resource

| pcs resource create sdb12_lsnr ocf:heartbeat:oralsnr sid=sdb12 home="/opt/12c/app/oracle/product/12.1.0.2/db_1" user=oracle12 op monitor interval=30 timeout=60 |

View the Oracle Listener configuration

| # pcs resource show sdb12_lsnr |

View the configuration options of the Oracle Database resource agent

| pcs resource describe ocf:heartbeat:oracle |

Configure the Oracle Database resource

| pcs resource create sdb12_oracle ocf:heartbeat:oracle sid=sdb12 home="/opt/12c/app/oracle/product/12.1.0.2/db_1" user=oracle12 op monitor interval=120 timeout=30 |

View the Oracle Database resource configuration

| # pcs resource show sdb12_oracle |

Configure the placement and start order of the Oracle resources

| # pcs constraint order start gfs2oradata12-clone then sdb12_lsnr |

| # pcs constraint order start sdb12_lsnr then sdb12_oracle |

| # pcs constraint colocation add sdb12_lsnr with gfs2oradata12-clone |

| # pcs constraint colocation add sdb12_oracle with sdb12_lsnr |

Add the Oracle services to the resource group

| # pcs resource group add SDB_RESOURCE sdb12_lsnr sdb12_oracle |